Yoav Goldberg

Topics: large language models, language models, bias, interpretation, evaluation, LLMs, theory of mind, emergence, analysis, reading comprehension, transformers, concepts, dataset, coreference resolution, shortcuts

41 presentations · 45 views · 1 citation

SHORT BIO

Yoav is an associate professor of computer science at Bar-Ilan University and the research director of AI2 Israel. His research interests include language understanding technologies with real-world applications, combining symbolic and neural representations, uncovering latent information in text, syntactic and semantic processing, and the interpretability and foundational understanding of deep learning models for text and sequences. He is the author of a textbook on deep learning techniques for natural language processing, was named among IEEE's AI Top 10 to Watch in 2018, and received the Krill Prize in Science in 2017. He has also received multiple best paper and outstanding paper awards at major NLP conferences. He received his Ph.D. in Computer Science from Ben-Gurion University and was a postdoctoral researcher at Google Research.

Presentations

Data-driven Coreference-based Ontology Building

Shir Ashury Tahan and 4 other authors

Leveraging Collection-Wide Similarities for Unsupervised Document Structure Extraction

Gili Lior and 2 other authors

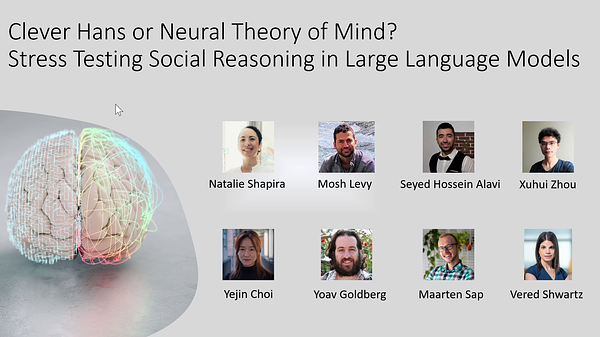

Clever Hans or Neural Theory of Mind? Stress Testing Social Reasoning in Large Language Models

Natalie Shapira and 7 other authors

NERetrieve: Dataset for Next Generation Named Entity Recognition and Retrieval

Uri Katz and 3 other authors

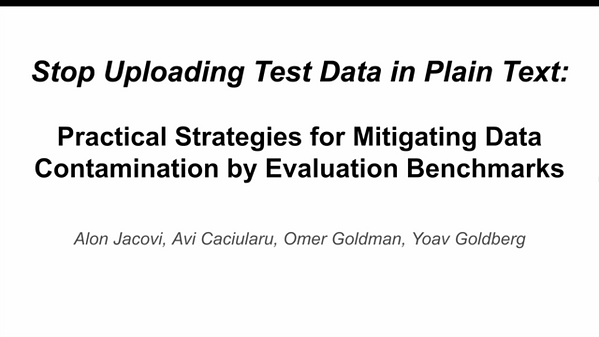

Stop Uploading Test Data in Plain Text: Practical Strategies for Mitigating Data Contamination by Evaluation Benchmarks

Alon Jacovi and 3 other authors

Guiding LLM to Fool Itself: Automatically Manipulating Machine Reading Comprehension Shortcut Triggers

Mosh Levy and 2 other authors

Guiding LLM to Fool Itself: Automatically Imbuing Multiple Shortcut Triggers in Question Answering Samples

Mosh Levy and 2 other authors

How Well Do Large Language Models Perform on Faux Pas Tests?

Natalie Shapira and 2 other authors

Neighboring Words Affect Human Interpretation of Saliency Explanations

Hendrik Schuff and 4 other authors

Conjunct Resolution in the Face of Verbal Omissions

Royi Rassin and 2 other authors

Linear Guardedness and its Implications

Shauli Ravfogel and 2 other authors

Conformal Nucleus Sampling

Shauli Ravfogel and 2 other authors

Understanding Transformer Memorization Recall Through Idioms

Adi Haviv and 5 other authors

LingMess: Linguistically Informed Multi Expert Scorers for Coreference Resolution

Shon Otmazgin and 2 other authors

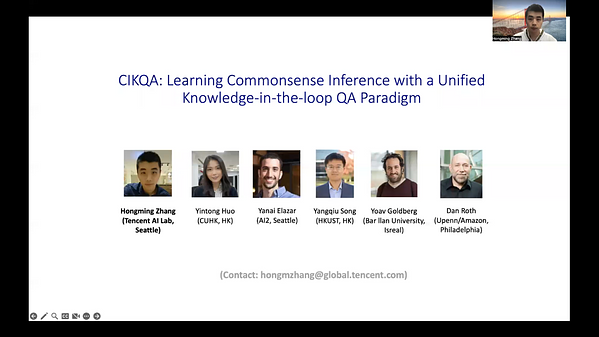

CIKQA: Learning Commonsense Inference with a Unified Knowledge-in-the-loop QA Paradigm

Hongming Zhang and 5 other authors