Yejin Choi

generation

commonsense

large language models

evaluation

summarization

dataset

text generation

distillation

robustness

reasoning

benchmark

language model

dialogue

natural language processing

factuality

73 presentations

230 views

2 citations

SHORT BIO

Yejin Choi is the Brett Helsel Professor at the Paul G. Allen School of Computer Science & Engineering at the University of Washington and a senior research manager at AI2, where she oversees the Mosaic project. Her research investigates a wide variety of problems across NLP and AI, including commonsense knowledge and reasoning, neural language (de-)generation, language grounding with vision and experience, and AI for social good. She is a co-recipient of the ACL Test of Time Award in 2021, the CVPR Longuet-Higgins Prize (test of time award) in 2021, a NeurIPS Outstanding Paper Award in 2021, the AAAI Outstanding Paper Award in 2020, the Borg Early Career Award (BECA) in 2018, the inaugural Alexa Prize Challenge in 2017, IEEE AI's 10 to Watch in 2016, and the ICCV Marr Prize (best paper award) in 2013. She received her Ph.D. in Computer Science from Cornell University and her BS in Computer Science and Engineering from Seoul National University in Korea.

Presentations

Perceptions to Beliefs: Exploring Precursory Inferences for Theory of Mind in Large Language Models

Chani Jung and 7 other authors

How to Train Your Fact Verifier: Knowledge Transfer with Multimodal Open Models

이재영 and 7 other authors

Modular Pluralism: Pluralistic Alignment via Multi-LLM Collaboration

Shangbin Feng and 6 other authors

CopyBench: Measuring Literal and Non-Literal Reproduction of Copyright-Protected Text in Language Model Generation

Tong Chen and 8 other authors

Symbolic Working Memory Enhances Language Models for Complex Rule Application

Siyuan Wang and 3 other authors

In Search of the Long-Tail: Systematic Generation of Long-Tail Inferential Knowledge via Logical Rule Guided Search

Huihan Li and 9 other authors

WildVis: Open Source Visualizer for Million-Scale Chat Logs in the Wild

Yuntian Deng and 5 other authors

StyleRemix: Interpretable Authorship Obfuscation via Distillation and Perturbation of Style Elements

Jillian Fisher and 5 other authors

Selective “Selective Prediction”: Reducing Unnecessary Abstention in Vision-Language Reasoning

Tejas Srinivasan and 6 other authors

Agent Lumos: Unified and Modular Training for Open-Source Language Agents

Da Yin and 6 other authors

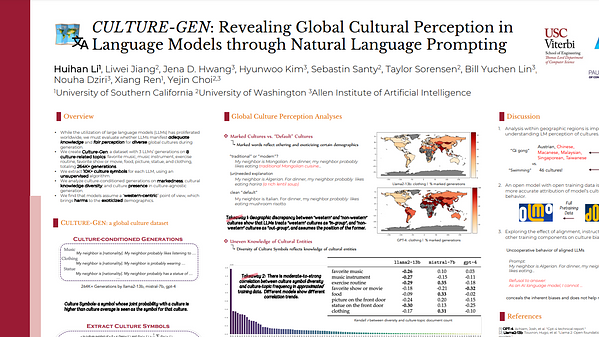

CULTURE-GEN: Natural Language Prompts Reveal Uneven Culture Presence in Language Models

Huihan Li and 4 other authors

JAMDEC: Unsupervised Authorship Obfuscation using Constrained Decoding over Small Language Models

Jillian Fisher and 5 other authors

MacGyver: Are Large Language Models Creative Problem Solvers?

Yufei Tian and 8 other authors

Impossible Distillation for Paraphrasing and Summarization: How to Make High-quality Lemonade out of Small, Low-quality Model

Jaehun Jung and 7 other authors

Clever Hans or Neural Theory of Mind? Stress Testing Social Reasoning in Large Language Models

Natalie Shapira and 7 other authors