Xuezhi Wang

large language models

commonsense reasoning

style

structure

adapter

sequence generation

parameter efficient learning

open-ended generations

analysis & evaluation

prompt constraints

continual learning

self training

5 presentations

17 views

SHORT BIO

Xuezhi Wang is a Research Scientist at Google Brain. Her primary interests are robustness and fairness in NLP models and enabling systematic generalization in language models. She received her PhD from the Computer Science Department at Carnegie Mellon University in 2016.

Presentations

Large Language Models Can Self-Improve

Jiaxin Huang and 6 other authors

Bounding the Capabilities of Large Language Models in Open Text Generation with Prompt Constraints

Albert Lu and 4 other authors

Measure and Improve Robustness in NLP Models: A Survey

Xuezhi Wang and 2 other authors

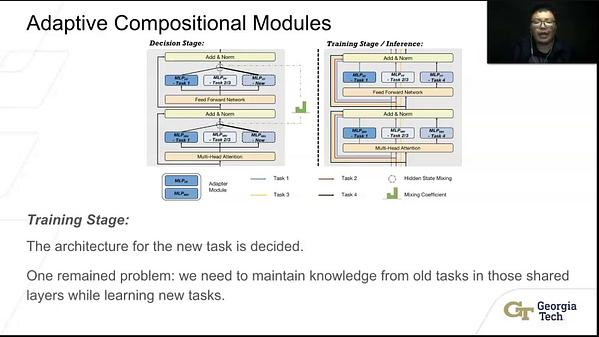

Continual Sequence Generation with Adaptive Compositional Modules

Yanzhe Zhang and 2 other authors

Continual Learning for Text Classification with Information Disentanglement Based Regularization

Yufan Huang and 4 other authors