Xiang Ren

large language models

robustness

in-context learning

commonsense reasoning

explainability

information extraction

explanation-based learning

few-shot learning

knowledge

dataset

benchmarking

generation

prompts

language model

commonsense

SHORT BIO

I'm an Associate Professor in Computer Science and the Andrew and Erna Viterbi Early Career Chair at USC, where I'm the PI of the Intelligence and Knowledge Discovery (INK) Research Lab. I also hold an appointment as a Research Team Leader at the Information Sciences Institute (ISI) and serve as a member of the USC NLP Group, the USC Machine Learning Center, and the ISI Center on Knowledge Graphs. Outside of my USC work, I spend time at the Allen Institute for AI (AI2) working on machine common sense. Previously, I was a Data Science Advisor at Snapchat. Prior to USC, I did my PhD work in computer science at UIUC. I've also spent time with the NLP group and the SNAP group at Stanford University.

Presentations

Symbolic Working Memory Enhances Language Models for Complex Rule Application

Siyuan Wang and 3 other authors

In Search of the Long-Tail: Systematic Generation of Long-Tail Inferential Knowledge via Logical Rule Guided Search

Huihan Li and 9 other authors

WildVis: Open Source Visualizer for Million-Scale Chat Logs in the Wild

Yuntian Deng and 5 other authors

Are Machines Better at Complex Reasoning? Unveiling Human-Machine Inference Gaps in Entailment Verification

Soumya Sanyal and 4 other authors

Relying on the Unreliable: The Impact of Language Models’ Reluctance to Express Uncertainty

Kaitlyn Zhou and 3 other authors

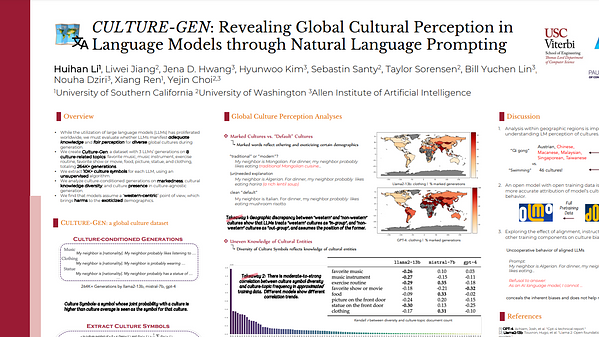

CULTURE-GEN: Natural Language Prompts Reveal Uneven Culture Presence in Language Models

Huihan Li and 4 other authors

Backdooring Instruction-Tuned Large Language Models with Virtual Prompt Injection

Jun Yan and 8 other authors

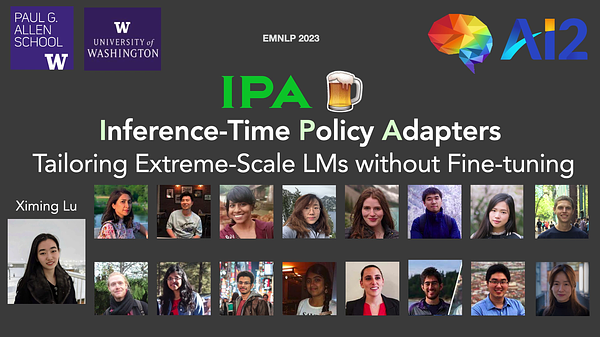

Inference-Time Policy Adapters (IPA): Tailoring Extreme-Scale LMs without Fine-tuning

Ximing Lu and 16 other authors

Exploring Distributional Shifts in Large Language Models for Code Analysis

Shushan Arakelyan and 3 other authors

Estimating Large Language Model Capabilities without Labeled Test Data

Harvey Fu and 4 other authors

How Predictable Are Large Language Model Capabilities? A Case Study on BIG-bench

Qinyuan Ye and 3 other authors

BITE: Textual Backdoor Attacks with Iterative Trigger Injection

Jun Yan and 2 other authors

FiD-ICL: A Fusion-in-Decoder Approach for Efficient In-Context Learning

Qinyuan Ye and 4 other authors

Are Machine Rationales (Not) Useful to Humans? Measuring and Improving Human Utility of Free-text Rationales

Brihi Joshi and 8 other authors