Nanyun Peng

Associate Professor @ UCLA

event extraction

large language models

dataset

evaluation

information extraction

question answering

paraphrasing

story generation

commonsense

natural language processing

benchmark

data augmentation

natural language generation

factuality

event argument extraction

66 presentations

55 views

1 citation

SHORT BIO

Nanyun is an Associate Professor in the Computer Science Department at the University of California, Los Angeles. Her research aims to build robust and generalizable Natural Language Processing (NLP) tools that lower communication barriers and enable AI agents to become companions for humans. With these goals in mind, she focuses on several research topics, including creative language generation, low-resource information extraction, and zero-shot cross-lingual transfer. She received her PhD in Computer Science from the Center for Language and Speech Processing at Johns Hopkins University. After that, she spent three awesome years at the University of Southern California as a Research Assistant Professor in the Computer Science Department and a Research Lead at the Information Sciences Institute. She has backgrounds in Linguistics and Economics and holds BAs in both.

Presentations

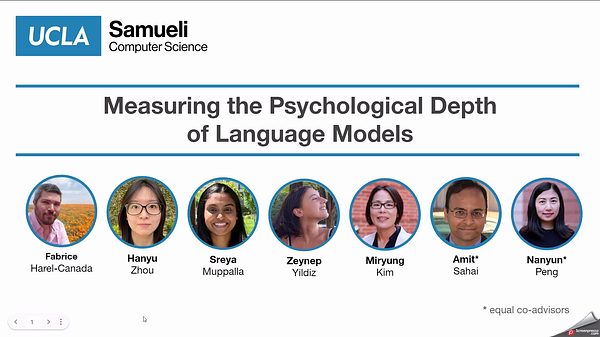

Measuring Psychological Depth in Language Models

Fabrice Harel-Canada and 6 other authors

Evaluating LLMs' Capability in Satisfying Lexical Constraints

Bingxuan Li and 4 other authors

SPEED++: A Multilingual Event Extraction Framework for Epidemic Prediction and Preparedness

Tanmay Parekh and 8 other authors

VDebugger: Harnessing Execution Feedback for Debugging Visual Programs

Xueqing Wu and 6 other authors

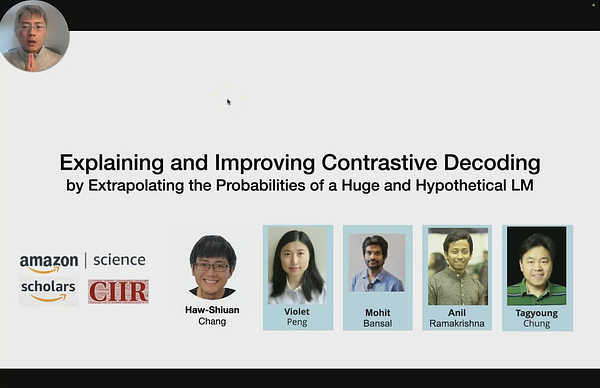

Explaining and Improving Contrastive Decoding by Extrapolating the Probabilities of a Huge and Hypothetical LM

Haw-Shiuan Chang and 4 other authors

QUDSELECT: Selective Decoding for Questions Under Discussion Parsing

Ashima Suvarna and 4 other authors

Re-ReST: Reflection-Reinforced Self-Training for Language Agents

Zi-Yi Dou and 4 other authors

Model Editing Harms General Abilities of Large Language Models: Regularization to the Rescue

Jia-Chen Gu and 6 other authors

Synchronous Faithfulness Monitoring for Trustworthy Retrieval-Augmented Generation

Di Wu and 4 other authors

LLM Self-Correction with DeCRIM: Decompose, Critique, and Refine for Enhanced Following of Instructions with Multiple Constraints

Thomas Palmeira Ferraz and 9 other authors

LLM-A*: Large Language Model Enhanced Incremental Heuristic Search on Path Planning

Silin Meng and 4 other authors

Tracking the Newsworthiness of Public Documents

Alexander Spangher and 5 other authors

PhonologyBench: Evaluating Phonological Skills of Large Language Models

Ashima Suvarna and 2 other authors

Argument-Aware Approach To Event Linking

I-Hung Hsu and 6 other authors

CaLM: Contrasting Large and Small Language Models to Verify Grounded Generation

I-Hung Hsu and 6 other authors

Improving Event Definition Following For Zero-Shot Event Detection

Zefan Cai and 8 other authors