Leshem Choshen

MIT, MIT-IBM

prompting

machine translation

evaluation

merging

fusing

language models

machine learning

syntax

bias mitigation

dataset

question answering

fairness

text classification

creative writing

generalization

18 presentations, 15 views, 30 citations

SHORT BIO

Leshem Choshen is a postdoctoral researcher at MIT-IBM whose research aims to enable collaborative pretraining through model recycling, efficient evaluation, and efficient pretraining (e.g., babyLM). He received the Rothschild and Fulbright postdoctoral fellowships, as well as the IAAI and Blavatnik best Ph.D. awards. With broad interests in NLP and ML, he has also worked on reinforcement learning, evaluation, and understanding how neural networks learn. In parallel, he took part in Project Debater, which built a machine able to hold a formal debate; the project culminated in a Nature cover and a live debate. He is also a dancer and runs tei.ma, a food and science blog (NisuiVeTeima on Instagram, Facebook, and TikTok).

Presentations

NumeroLogic: Number Encoding for Enhanced LLMs' Numerical Reasoning

Eli Schwartz and 5 other authors

Fuse to Forget: Bias Reduction and Selective Memorization through Model Fusion

Kerem Zaman and 2 other authors

Label-Efficient Model Selection for Text Generation

Shir Ashury Tahan and 5 other authors

Unitxt: Flexible, Shareable and Reusable Data Preparation and Evaluation for Generative AI

Elron Bandel and 11 other authors

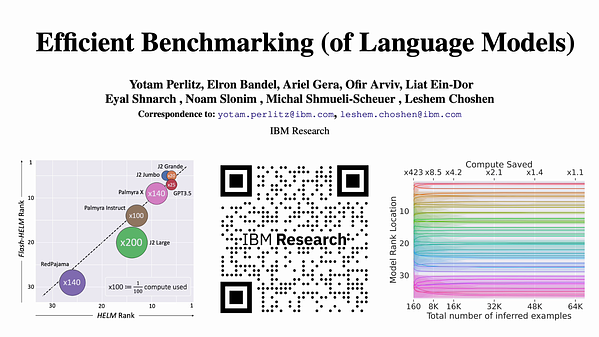

Efficient Benchmarking (of Language Models)

Yotam Perlitz and 8 other authors

Where to start? Analyzing the potential value of intermediate models

Leshem Choshen and 4 other authors

Human Learning by Model Feedback: The Dynamics of Iterative Prompting with Midjourney

Shachar Don-Yehiya and 2 other authors

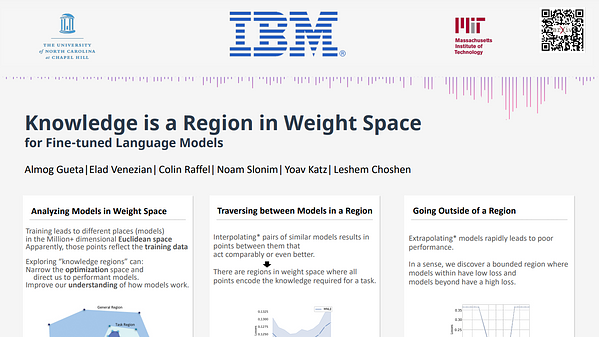

Knowledge is a Region in Weight Space for Fine-tuned Language Models

Leshem Choshen

DisentQA: Disentangling Parametric and Contextual Knowledge with Counterfactual Question Answering

Ella Neeman and 5 other authors

PreQuEL: Quality Estimation of Machine Translation Outputs in Advance

Shachar Don-Yehiya and 2 other authors

On Neurons Invariant to Sentence Structural Changes in Neural Machine Translation

Gal Patel and 2 other authors

Enhancing the Transformer Decoder with Transition-based Syntax

Leshem Choshen

Reinforcement Learning with Large Action Spaces for Neural Machine Translation

Asaf Yehudai and 3 other authors

Cluster & Tune: Boost Cold Start Performance in Text Classification

Eyal Shnarch and 6 other authors

The Grammar-Learning Trajectories of Neural Language Models

Leshem Choshen and 3 other authors

Evaluating Factual Consistency in Knowledge-Grounded Dialogues via Question Generation and Question Answering

Or Honovich and 5 other authors