Iz Beltagy

Research Scientist @ Allen Institute for AI

in-context learning

meta-learning

multi-task learning

analysis

zero-shot

few-shot learning

few-shot

meaning

prompting

architecture

scaling

llm

transformer

fusion-in-decoder

language models

14 presentations

144 views

SHORT BIO

Senior Research Scientist on the AllenNLP team at the Allen Institute for Artificial Intelligence (AI2), and co-chair of the Architecture & Scaling working group in BigScience.

Presentations

Z-ICL: Zero-Shot In-Context Learning with Pseudo-Demonstrations

Xinxi Lyu and 4 other authors

Transparency Helps Reveal When Language Models Learn Meaning

Zhaofeng Wu and 4 other authors

FiD-ICL: A Fusion-in-Decoder Approach for Efficient In-Context Learning

Qinyuan Ye and 4 other authors

Continued Pretraining for Better Zero- and Few-Shot Promptability

Zhaofeng Wu and 6 other authors

Few-Shot Self-Rationalization with Natural Language Prompts

Ana Marasovic and 3 other authors

What Language Model to Train if You Have One Million GPU Hours?

Julien Launay and 1 other author

Zero- and Few-Shot NLP with Pretrained Language Models - Pretraining considerations for zero/few-shot

Iz Beltagy

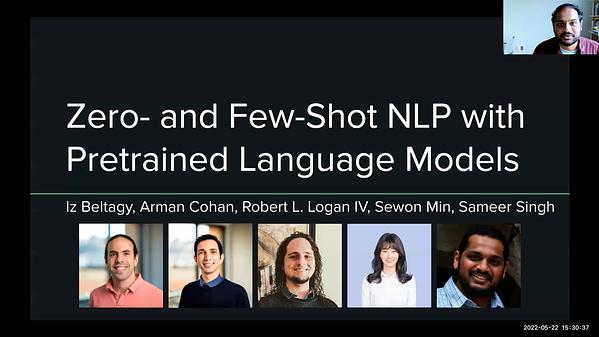

Zero- and Few-Shot NLP with Pretrained Language Models Part 1

Arman Cohan and 4 other authors

Zero- and Few-Shot NLP with Pretrained Language Models Part 2

Arman Cohan and 4 other authors

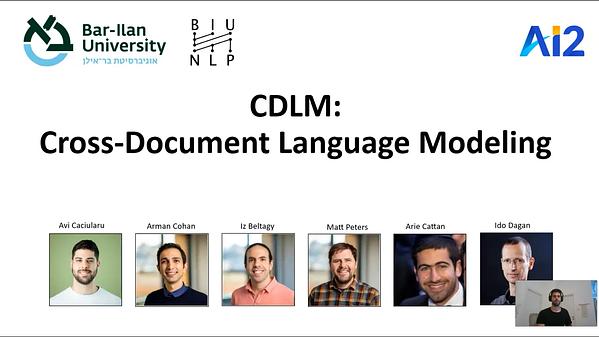

CDLM: Cross-Document Language Modeling

Avi Caciularu and 5 other authors

MS2: Multi-Document Summarization of Medical Studies

Jay DeYoung and 2 other authors

Multi-Document Summarization of Medical Studies

Jay DeYoung and 2 other authors

CDLM: Cross-Document Language Modeling

Avi Caciularu and 5 other authors

A Dataset of Information-Seeking Questions and Answers Anchored in Research Papers

Pradeep Dasigi and 5 other authors