Ari Holtzman

language model, generation, text generation, few-shot learning, contrastive, language modeling, language grounding, neuro-symbolic, embodied AI, paraphrasing, decoding, infilling, unsupervised, in-context learning

11 presentations · 26 views

SHORT BIO

I work on understanding what Language Models do, what we actually want out of Natural Language Generation, and what core concepts and metaphors we are missing to clearly explain and operationalize our intentions in these domains. I am a PhD student at the University of Washington, advised by Luke Zettlemoyer.

Presentations

Contrastive Decoding: Open-ended Text Generation as Optimization

Xiang Lisa Li and 7 other authors

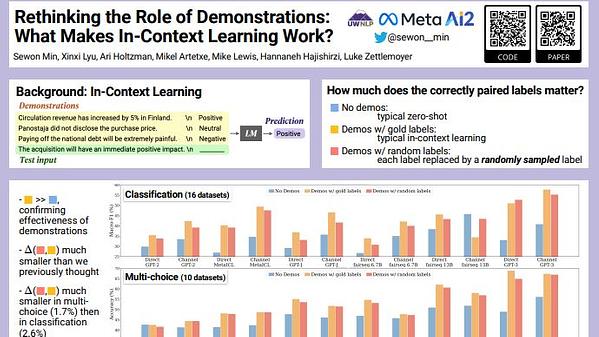

Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

Sewon Min and 6 other authors

DEMix Layers: Disentangling Domains for Modular Language Modeling

Suchin Gururangan and 3 other authors

Surface Form Competition: Why the Highest Probability Answer Isn’t Always Right

Ari Holtzman and 4 other authors

CLIPScore: A Reference-free Evaluation Metric for Image Captioning

Jack Hessel and 4 other authors

CLIPScore: A Reference-free Evaluation Metric for Image Captioning

Jack Hessel and 4 other authors

Surface Form Competition

Ari Holtzman and 4 other authors

PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World

Yejin Choi and 5 other authors

Reflective Decoding: Beyond Unidirectional Generation with Off-the-Shelf Language Models

Peter West and 5 other authors

TuringAdvice: A Generative and Dynamic Evaluation of Language Use

Rowan Zellers and 5 other authors

MultiTalk: A Highly-Branching Dialog Testbed for Diverse Conversations

Yao Dou and 3 other authors