Aaron Mueller

Graduate student @ Johns Hopkins University

syntax

interpretability

inductive bias

language models

pre-training

classification

story generation

few-shot

gpt-2

language model

sequence-to-sequence

diverse decoding

human evaluation

narrative generation

hierarchy

7 presentations

11 views

SHORT BIO

Aaron Mueller is a recent PhD graduate in computer science from Johns Hopkins University’s Center for Language & Speech Processing, and will soon be a post-doctoral researcher advised by Yonatan Belinkov and David Bau. His work centers on natural language processing (NLP), interpreting the decisions of NLP systems, and evaluating neural language models. Outside of Johns Hopkins, Aaron has researched efficient NLP during internships at Amazon Web Services and Meta, and has previously conducted research at the University of Massachusetts Amherst and New York University.

Presentations

Language model acceptability judgements are not always robust to context

Koustuv Sinha and 6 other authors

How to Plant Trees in Language Models: Data and Architectural Effects on the Emergence of Syntactic Inductive Biases

Aaron Mueller and 1 other author

Causal Analysis of Syntactic Agreement Neurons in Multilingual Language Models

Aaron Mueller and 2 other authors

Coloring the Blank Slate: Pre-training Imparts a Hierarchical Inductive Bias to Sequence-to-sequence Models

Aaron Mueller and 4 other authors

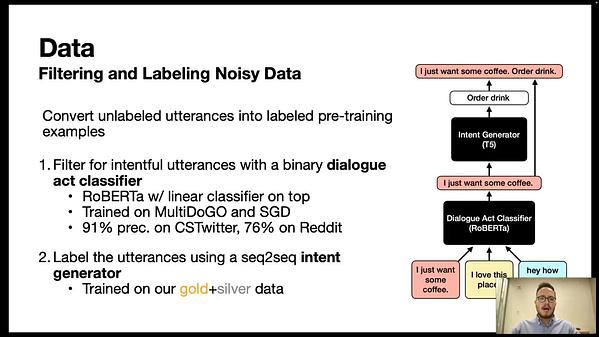

Label Semantic Aware Pre-training for Few-shot Text Classification

Aaron Mueller and 6 other authors

Causal Analysis of Syntactic Agreement Mechanisms in Neural Language Models

Matthew Finlayson and 5 other authors

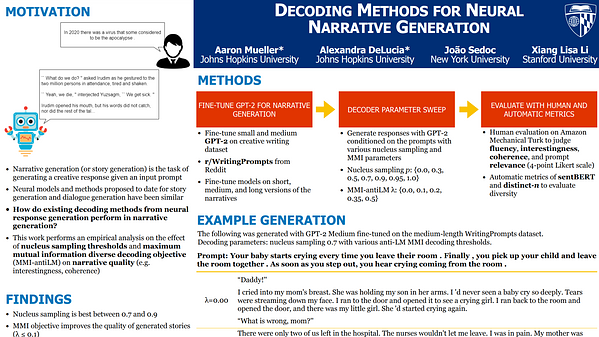

Decoding Methods for Neural Narrative Generation

Alexandra DeLucia and 3 other authors