Chuhan Wu

knowledge distillation

pre-trained language model

multi-teacher knowledge distillation

news recommendation

ranking

efficiency

alignment

long document modeling

hierarchical transformer

efficient transformer

personalized news recommendation; user interest; hierarchical interest tree

news recommendation; news popularity; user interest

finetuning

recall

web search

Presentations: 14

Number of views: 5

Short Bio

Chuhan Wu is a Ph.D. candidate in the Department of Electronic Engineering at Tsinghua University, Beijing, China. His current research interests include recommender systems, user modeling, and social media mining.

Presentations

Learning to Edit: Aligning LLMs with Knowledge Editing

Yuxin Jiang and 11 other authors

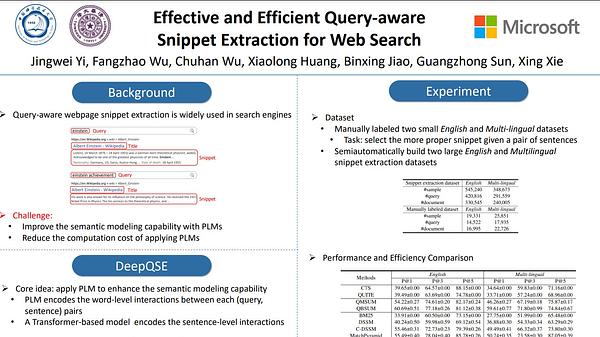

Effective and Efficient Query-aware Snippet Extraction for Web Search

Jingwei Yi and 6 other authors

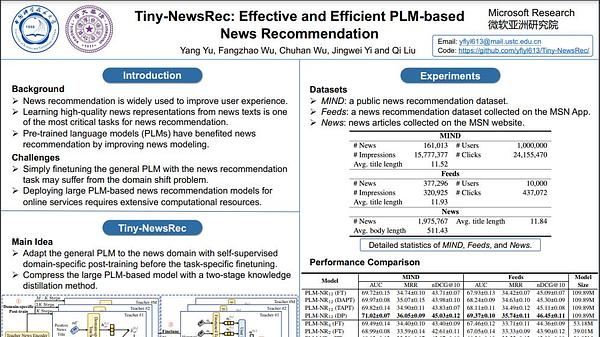

Tiny-NewsRec: Effective and Efficient PLM-based News Recommendation

Yang Yu and 4 other authors

Two Birds with One Stone: Unified Model Learning for Both Recall and Ranking in News Recommendation

Chuhan Wu and 3 other authors

NoisyTune: A Little Noise Can Help You Finetune Pretrained Language Models Better

Chuhan Wu and 3 other authors

Efficient-FedRec: Efficient Federated Learning Framework for Privacy-Preserving News Recommendation

Jingwei Yi and 5 other authors

Uni-FedRec: A Unified Privacy-Preserving News Recommendation Framework for Model Training and Online Serving

Tao Qi and 4 other authors

NewsBERT: Distilling Pre-trained Language Model for Intelligent News Application

Chuhan Wu and 5 other authors

One Teacher is Enough? Pre-trained Language Model Distillation from Multiple Teachers

Chuhan Wu and 2 other authors

One Teacher is Enough? Pre-trained Language Model Distillation from Multiple Teachers

Chuhan Wu and 2 other authors

HieRec: Hierarchical User Interest Modeling for Personalized News Recommendation

Tao Qi and 6 other authors

PP-Rec: News Recommendation with Personalized User Interest and Time-aware News Popularity

Tao Qi and 4 other authors

Hi-Transformer: Hierarchical Interactive Transformer for Efficient and Effective Long Document Modeling

Chuhan Wu and 3 other authors

DA-Transformer: Distance-aware Transformer

Chuhan Wu and 2 other authors