Yang Liu

summarization

large language models

question answering

information retrieval

few-shot learning

language modeling

news

prompting

text summarization

segmentation

parameter-efficient fine-tuning

instructions

prompt tuning

in-context learning

prompt learning

10 presentations

2 views

SHORT BIO

Yang Liu is a Senior Researcher at Microsoft. Previously, Yang obtained his PhD from the University of Edinburgh, working with Prof. Mirella Lapata. His research interests include text summarization, text generation, and structured learning, with the aim of helping people better understand and digest long documents.

Presentations

PEARL: Prompting Large Language Models to Plan and Execute Actions Over Long Documents

Simeng Sun and 5 other authors

Auto-Instruct: Automatic Instruction Generation and Ranking for Black-Box Language Models

Zhihan Zhang and 8 other authors

UniSumm and SummZoo: Unified Model and Diverse Benchmark for Few-Shot Summarization

Yulong Chen and 6 other authors

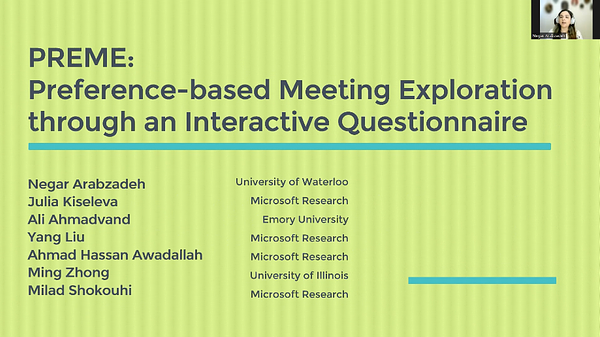

PREME: Preference-based Meeting Exploration through an Interactive Questionnaire

Negar Arabzadeh and 6 other authors

Training Data is More Valuable than You Think: A Simple and Effective Method by Retrieving from Training Data

Shuohang Wang and 7 other authors

DialogLM: Pre-Trained Model for Long Dialogue Understanding and Summarization

Ming Zhong and 4 other authors

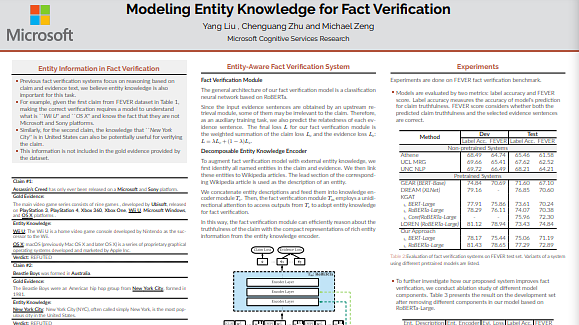

Modeling Entity Knowledge for Fact Verification

Yang Liu and 2 other authors

MediaSum: A Large-scale Media Interview Dataset for Dialogue Summarization

Chenguang Zhu and 3 other authors

Noisy Self-Knowledge Distillation for Text Summarization

Yang Liu and 2 other authors

How Does In-Context Learning Help Prompt Tuning?

Simeng Sun and 4 other authors