Hanjie Chen

few-shot, post-hoc explanations, interpretability, graphs, stability, interactions, explainable NLP, pre-trained, pathologies, output probability, free-text rationales, information-theoretic evaluation, conditional v-information

6 presentations, 10 views

SHORT BIO

Hanjie Chen recently received her Ph.D. in computer science from the University of Virginia. Her research interests lie in trustworthy AI, natural language processing (NLP), and interpretable machine learning. She aims to develop explainable AI techniques that are easily accessible to system developers and end users, so that they can build trustworthy and reliable intelligent systems. Her current research centers on trustworthy NLP, with a focus on interpretability, robustness, and fairness, to support mutual understanding and interaction between humans and neural language models.

Presentations

REV: Information-Theoretic Evaluation of Free-Text Rationales

Hanjie Chen

Improving Interpretability via Explicit Word Interaction Graph Layer

Arshdeep Sekhon and 5 other authors

Identifying the Source of Vulnerability in Explanation Discrepancy: A Case Study in Neural Text Classification

Ruixuan Tang and 2 other authors

Adversarial Training for Improving Model Robustness? Look at Both Prediction and Interpretation

Hanjie Chen and 1 other author

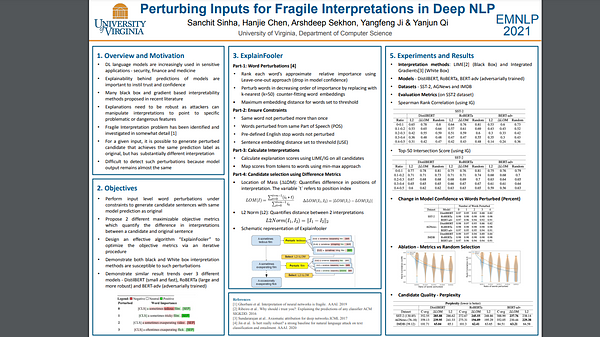

Perturbing Inputs for Fragile Interpretations in Deep Natural Language Processing

Sanchit Sinha and 4 other authors

Explaining Neural Network Predictions on Sentence Pairs via Learning Word-Group Masks

Hanjie Chen and 6 other authors