Aman Madaan

Graduate student @ CMU

generation

graph generation

language generation

reasoning

commonsense reasoning

gpt-3

information retrieval

survey

analysis

large language models

text generation

code generation

prompting

seq2seq

language models

13 presentations

10 views

SHORT BIO

Aman is a PhD candidate at Carnegie Mellon University. His current work aims to improve the reasoning capabilities of large language models, with an emphasis on leveraging feedback and the synergy between code generation and natural language reasoning.

Presentations

Program-Aided Reasoners (Better) Know What They Know

Anubha Kabra and 5 other authors

Bridging the Gap: A Survey on Integrating (Human) Feedback for Natural Language Generation

Patrick Fernandes and 10 other authors

What Makes Chain-of-Thought Prompting Effective? A Counterfactual Study

Aman Madaan and 2 other authors

Language Models of Code are Few-Shot Commonsense Learners

Aman Madaan and 1 other author

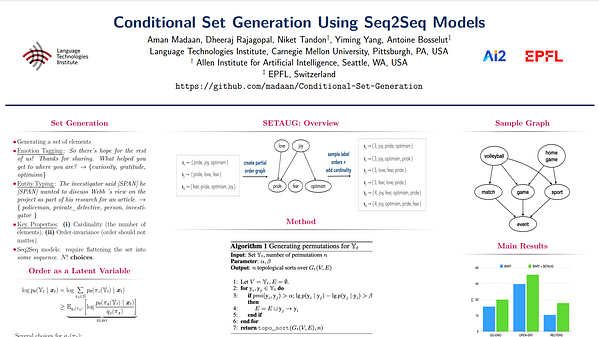

Conditional set generation using Seq2seq models

Aman Madaan

MemPrompt: Memory-assisted Prompt Editing with User Feedback

Aman Madaan

Learning to repair: Repairing model output errors after deployment using a dynamic memory of feedback

Niket Tandon and 3 other authors

GUD-IR: Generative Retrieval for Semiparametric Models

Niket Tandon and 3 other authors

CURIE: An Iterative Querying Approach for Reasoning About Situations

Dheeraj Rajagopal and 7 other authors

Memory-assisted prompt editing to improve GPT-3 after deployment

Niket Tandon and 3 other authors

Think about it! Improving defeasible reasoning by first modeling the question scenario.

Aman Madaan

Could you give me a hint? Generating inference graphs for defeasible reasoning

Aman Madaan and 4 other authors

Neural Language Modeling for Contextualized Temporal Graph Generation

Aman Madaan and 1 other author