Dan Jurafsky

Professor @ Stanford University

computational social science

validity

dialogue

large language models

hallucination

training data frequency

nlp

summarization

multilinguality

bias

low-resource nlp

education

framing

text classification

misinformation

23 presentations

23 views

SHORT BIO

Dan Jurafsky is Professor of Linguistics, Professor of Computer Science, and Jackson Eli Reynolds Professor in Humanities at Stanford University.

He is the recipient of a 2002 MacArthur Fellowship, a member of the American Academy of Arts and Sciences, and a fellow of the Association for Computational Linguistics, the Linguistic Society of America, and the American Association for the Advancement of Science. Dan is the co-author, with Jim Martin, of the widely used textbook "Speech and Language Processing", and co-created, with Chris Manning, the first massive open online course in Natural Language Processing. His trade book "The Language of Food: A Linguist Reads the Menu" was a finalist for the 2015 James Beard Award. His research ranges widely across NLP as well as its applications to the behavioral and social sciences.

Presentations

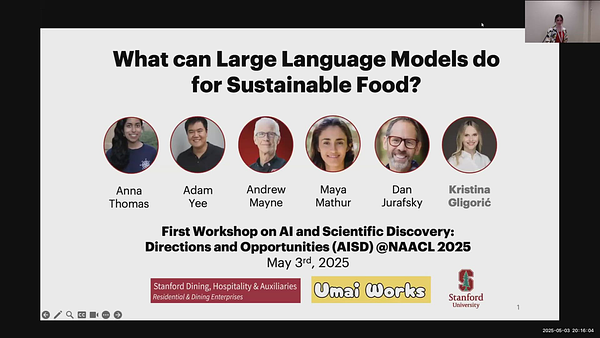

What Can Large Language Models Do for Sustainable Food?

Kristina Gligoric and 5 other authors

Can Unconfident LLM Annotations Be Used for Confident Conclusions?

Kristina Gligoric and 4 other authors

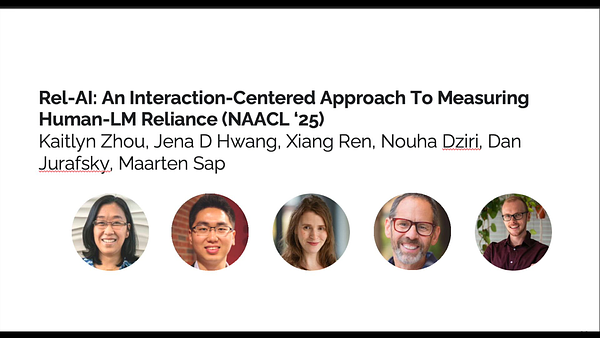

REL-A.I.: An Interaction-Centered Approach To Measuring Human-LM Reliance

Kaitlyn Zhou and 5 other authors

Rethinking Word Similarity: Semantic Similarity through Classification Confusion

Kaitlyn Zhou and 5 other authors

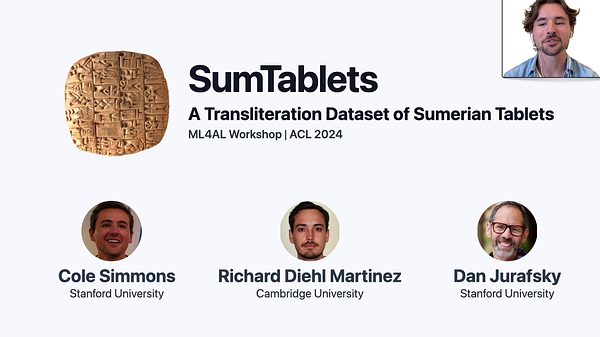

SumTablets: A Transliteration Dataset of Sumerian Tablets

Cole Simmons and 2 other authors

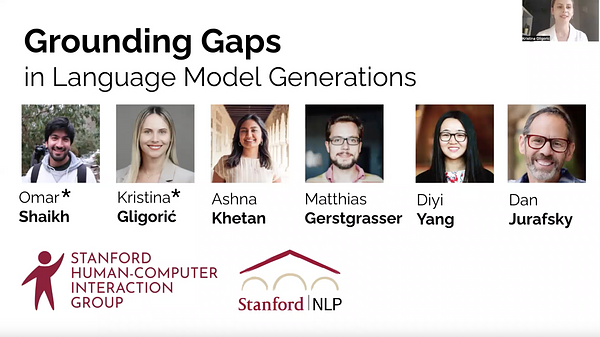

Grounding Gaps in Language Model Generations

Omar Shaikh and 5 other authors

NLP Systems That Can’t Tell Use from Mention Censor Counterspeech, but Teaching the Distinction Helps

Kristina Gligoric and 4 other authors

AnthroScore: A Computational Linguistic Measure of Anthropomorphism

Myra Cheng and 3 other authors

Navigating the Grey Area: How Expressions of Uncertainty and Overconfidence Affect Language Models

Kaitlyn Zhou and 2 other authors

Making More of Little Data: Improving Low-Resource Automatic Speech Recognition Using Data Augmentation

Martijn Bartelds and 4 other authors

Marked Personas: Using Natural Language Prompts to Measure Stereotypes in Language Models

Myra Cheng and 2 other authors

Multilingual BERT has an accent: Evaluating English influences on fluency in multilingual models

Isabel Papadimitriou and 2 other authors

Mini But Mighty: Efficient Multilingual Pretraining with Linguistically-Informed Data Selection

Tolulope Ogunremi and 2 other authors

When Do Pre-Training Biases Propagate to Downstream Tasks? A Case Study in Text Summarization

Faisal Ladhak and 6 other authors
