Dirk Hovy

Professor @ Bocconi University

bias

hate speech

language models

large language models

evaluation

llms

gender

safety

zero-shot learning

survey

domain adaptation

pretrained language model

multilinguality

language modeling

regularization

35 presentations

63 views

SHORT BIO

Dirk Hovy is an Associate Professor in the Computing Sciences Department at Bocconi University and the scientific director of the Data and Marketing Insights research unit. He is interested in the interaction between language, society, and machine learning: what language can tell us about society, and what computers can tell us about language. He is also interested in ethical questions of bias and algorithmic fairness in machine learning.

Presentations

Divine LLaMAs: Bias, Stereotypes, Stigmatization, and Emotion Representation of Religion in Large Language Models

Flor Miriam Plaza-del-Arco and 4 other authors

Twists, Humps, and Pebbles: Multilingual Speech Recognition Models Exhibit Gender Performance Gaps

Giuseppe Attanasio and 3 other authors

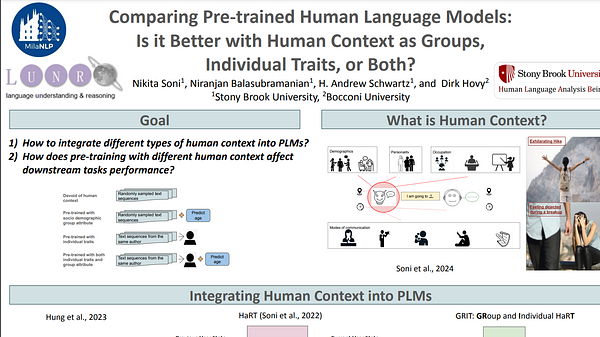

Comparing Pre-trained Human Language Models: Is it Better with Human Context as Groups, Individual Traits, or Both?

Nikita Soni and 3 other authors

My Answer is C: First-Token Probabilities Do Not Match Text Answers in Instruction-Tuned Language Models

Xinpeng Wang and 7 other authors

"My Answer is C": First-Token Probabilities Do Not Match Text Answers in Instruction-Tuned Language Models

Xinpeng Wang and 7 other authors

Angry Men, Sad Women: Large Language Models Reflect Gendered Stereotypes in Emotion Attribution

Flor Miriam Plaza-del-Arco and 4 other authors

Classist Tools: Social Class Correlates with Performance in NLP

Amanda Cercas Curry and 3 other authors

Narratives at Conflict: Computational Analysis of News Framing in Multilingual Disinformation Campaigns

Antonina Sinelnik and 1 other author

Compromesso! Italian Many-Shot Jailbreaks Undermine the Safety of Large Language Models

Fabio Pernisi and 2 other authors

XSTest: A Test Suite for Identifying Exaggerated Safety Behaviours in Large Language Models

Paul Röttger and 5 other authors

Explaining Speech Classification Models via Word-Level Audio Segments and Paralinguistic Features

Eliana Pastor and 4 other authors

MilaNLP at SemEval-2023 Task 10: Ensembling Domain-Adapted and Regularized Pretrained Language Models for Robust Sexism Detection

Amanda Cercas Curry and 3 other authors

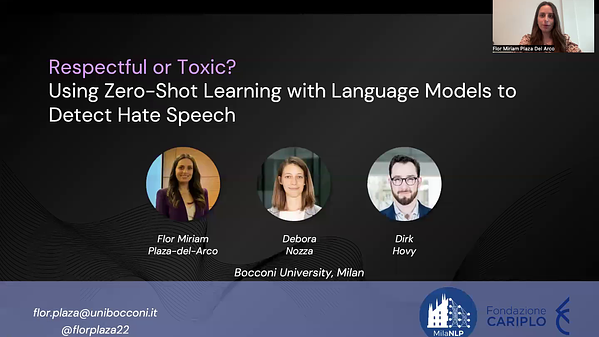

Respectful or Toxic? Using Zero-Shot Learning with Language Models to Detect Hate Speech

Flor Miriam Plaza-del-Arco and 2 other authors

The State of Profanity Obfuscation in Natural Language Processing Scientific Publications

Debora Nozza and 1 other author

Temporal and Second Language Influence on Intra-Annotator Agreement and Stability in Hate Speech Labelling

Gavin Abercrombie and 2 other authors
