Trapit Bansal

University of Massachusetts Amherst

pre-trained language model

meta-learning

few-shot learning

entity linking

entity typing

knowledge-informed representation

out-of-distribution generalization

SHORT BIO

Trapit is a Ph.D. student at UMass Amherst. His research focuses on learning NLP models from limited human-labeled data, with an emphasis on self-supervised learning, multi-task learning, and meta-learning as ways to build models that generalize. He has also worked on distant supervision methods for knowledge extraction, methods for knowledge representation and reasoning, and reinforcement learning for multi-agent systems.

Presentations

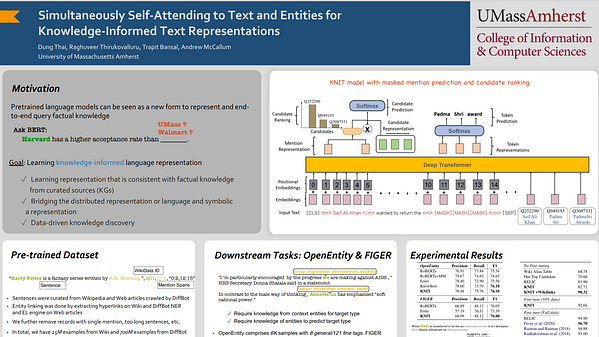

Simultaneously Self-Attending to Text and Entities for Knowledge-Informed Text Representations

Dung Thai and 3 other authors

Learning to Few-Shot Learn Across Diverse Natural Language Classification Tasks

Trapit Bansal