Yejin Choi

Allen Institute for AI

language models

AMR

semantics

misinformation

commonsense

common sense

computational social science

pretrained language models

large language models

news

disinformation

fake news detection

politics

abusive language

images

12 presentations

48 views

Presentations

You Are An Expert Linguistic Annotator: Limits of LLMs as Analyzers of Abstract Meaning Representation

Valentina Pyatkin and 4 other authors

From Dogwhistles to Bullhorns: Unveiling Coded Rhetoric with Language Models

Julia Mendelsohn and 3 other authors

Faking Fake News for Real Fake News Detection: Propaganda-Loaded Training Data Generation

Kung-Hsiang Huang and 4 other authors

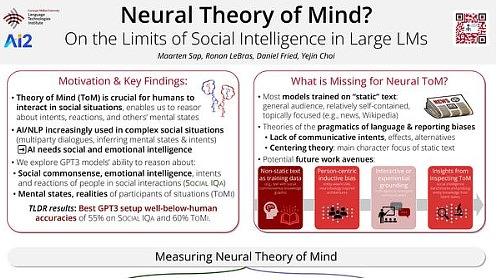

Neural Theory-of-Mind? On the Limits of Social Intelligence in Large LMs

Maarten Sap and 3 other authors

Reframing Human-AI Collaboration for Generating Free-Text Explanations

Sarah Wiegreffe and 4 other authors

Knowledge is Power: Symbolic Knowledge Distillation and Commonsense Morality

Yejin Choi

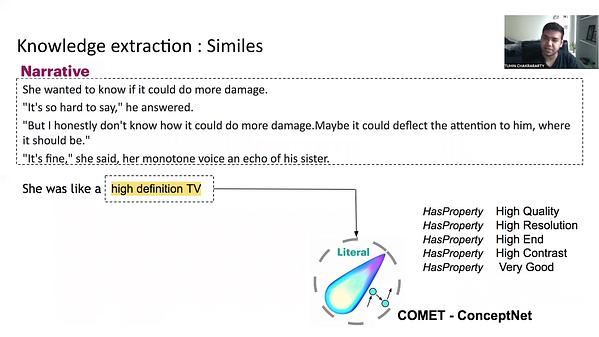

Interpreting Figurative Language in Narratives

Tuhin Chakrabarty and 2 other authors

Contrastive Explanations for Model Interpretability

Alon Jacovi and 5 other authors

Moral Stories: Situated Reasoning about Norms, Intents, Actions, and their Consequences

Denis Emelin and 3 other authors

Contrastive Explanations for Model Interpretability

Alon Jacovi and 5 other authors

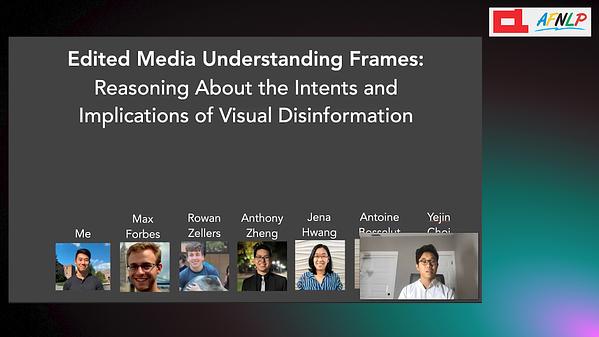

Edited Media Understanding Frames: Reasoning About the Intent and Implications of Visual Misinformation

Jeff Da and 6 other authors

Do Neural Language Models Overcome Reporting Bias?

Vered Shwartz and 1 other author