Luke Zettlemoyer

Professor @ University of Washington

few-shot learning

prompting

multilingual

large language models

in-context learning

interpretation

language modeling

pretraining

llm

cross-lingual

language model

evaluation

analysis

dataset

translate-test

32 presentations | 82 views

SHORT BIO

Luke Zettlemoyer is a Professor in the Paul G. Allen School of Computer Science & Engineering at the University of Washington, and a Senior Research Director at Meta. His research focuses on empirical methods for natural language semantics, and involves designing machine learning algorithms, introducing new tasks and datasets, and, most recently, studying how best to develop new architectures and self-supervision signals for pre-training. His honors include being elected ACL President, being named an ACL Fellow, and winning a PECASE award, an Allen Distinguished Investigator award, and multiple best paper awards. Luke was an undergrad at NC State, received his PhD from MIT, and was a postdoc at the University of Edinburgh.

Presentations

Does Liking Yellow Imply Driving a School Bus? Semantic Leakage in Language Models

Hila Gonen and 4 other authors

CopyBench: Measuring Literal and Non-Literal Reproduction of Copyright-Protected Text in Language Model Generation

Tong Chen and 8 other authors

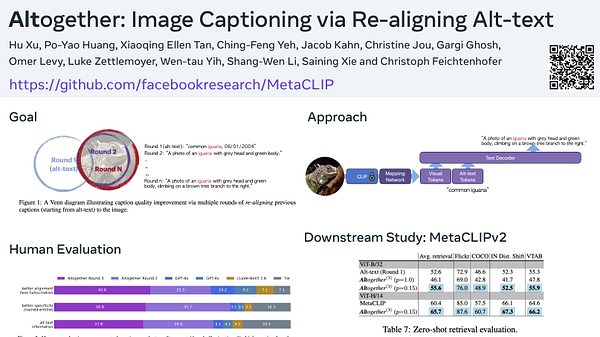

Altogether: Image Captioning via Re-aligning Alt-text

Hu Xu and 12 other authors

Breaking the Curse of Multilinguality with Cross-lingual Expert Language Models

Terra Blevins and 6 other authors

The Belebele Benchmark: a Parallel Reading Comprehension Dataset in 122 Language Variants

Lucas Bandarkar and 9 other authors

MYTE: Morphology-Driven Byte Encoding for Better and Fairer Multilingual Language Modeling

Tomasz Limisiewicz and 4 other authors

Translate to Disambiguate: Zero-shot Multilingual Word Sense Disambiguation with Pretrained Language Models

Haoqiang Kang and 2 other authors

Revisiting Machine Translation for Cross-lingual Classification

Mikel Artetxe and 4 other authors

Demystifying Prompts in Language Models via Perplexity Estimation

Hila Gonen and 4 other authors

FActScore: Fine-grained Atomic Evaluation of Factual Precision in Long Form Text Generation

Sewon Min and 8 other authors

XLM-V: Overcoming the Vocabulary Bottleneck in Multilingual Masked Language Models

Davis Liang and 7 other authors

Towards Understanding Chain-of-Thought Prompting: An Empirical Study of What Matters

Boshi Wang and 6 other authors

Z-ICL: Zero-Shot In-Context Learning with Pseudo-Demonstrations

Xinxi Lyu and 4 other authors

CREPE: Open-Domain Question Answering with False Presuppositions

Xinyan Velocity Yu and 3 other authors

Prompting Language Models for Linguistic Structure

Terra Blevins and 2 other authors