Hongru WANG

large language models

readability

dialog systems

dialogue system

prompting

graph modeling

prompt

recommender system

knowledge probing

multiple knowledge sources

retrieval-based augmentation

retrieval-free knowledge-grounded dialog

simulated dialogs

personalized dialogue system

llm safety

10 presentations

SHORT BIO

Hongru is currently a third-year Ph.D. candidate at The Chinese University of Hong Kong, supervised by Prof. Kam-Fai WONG. He obtained his Bachelor's degree from the Communication University of China in 2019 and his Master's degree from The Chinese University of Hong Kong in 2020. He has published over 10 papers at top international conferences, including ACL, EMNLP, COLING, and ICASSP. His research interests include Dialogue Systems, RLAIF, Tool Learning, and Large Language Models.

Presentations

Large Language Models as Source Planner for Personalized Knowledge-grounded Dialogues

Hongru WANG

SELF-GUARD: Empower the LLM to Safeguard Itself

Zezhong WANG and 7 other authors

Enhancing Large Language Models Against Inductive Instructions with Dual-critique Prompting

Rui Wang and 6 other authors

Cue-CoT: Chain-of-thought Prompting for Responding to In-depth Dialogue Questions with LLMs

Hongru WANG and 7 other authors

Large Language Models as Source Planner for Personalized Knowledge-grounded Dialogues

Hongru WANG and 9 other authors

Improving Factual Consistency for Knowledge-Grounded Dialogue Systems via Knowledge Enhancement and Alignment

Boyang XUE and 9 other authors

ReadPrompt: A Readable Prompting Method for Reliable Knowledge Probing

Zezhong WANG and 5 other authors

MCML: A Novel Memory-based Contrastive Meta-Learning Method for Few Shot Slot Tagging

Hongru WANG and 3 other authors

Retrieval-free Knowledge Injection through Multi-Document Traversal for Dialogue Models

Rui Wang and 9 other authors

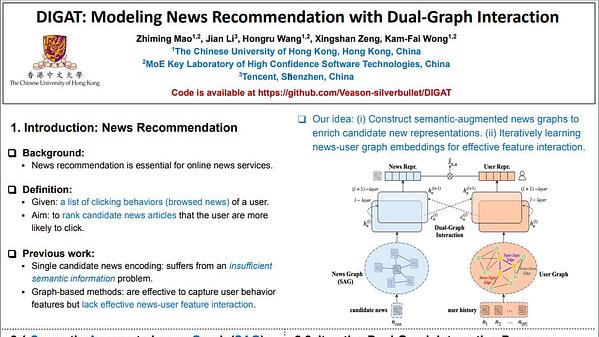

DIGAT: Modeling News Recommendation with Dual-Graph Interaction

Zhiming Mao and 4 other authors