Ranjay Krishna

University of Washington, Computer Science & Engineering, Seattle, United States

knowledge distillation

distilling step-by-step

artificial intelligence

multi-agents

watermark

retrieval-augmented generation

large language model

long context

chain-of-thought reasoning

natural language rationales

llm-based agents

lost-in-the-middle

positional attention bias

attention calibration

7 presentations

Presentations

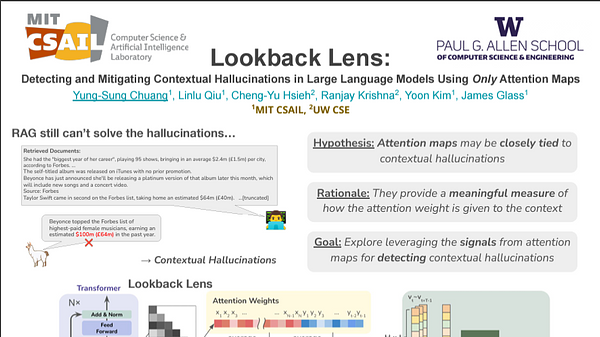

Lookback Lens: Detecting and Mitigating Contextual Hallucinations in Large Language Models Using Only Attention Maps

Yung-Sung Chuang and 5 other authors

Is C4 Dataset Enough for Pruning? An Investigation of Calibration Data for LLM Pruning

Abhinav Bandari and 7 other authors

ImageInWords: Unlocking Hyper-Detailed Image Descriptions

Roopal Garg and 9 other authors

Found in the middle: Calibrating Positional Attention Bias Improves Long Context Utilization

Cheng-Yu Hsieh and 10 other authors

Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes

Cheng-Yu Hsieh and 8 other authors

Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes

Cheng-Yu Hsieh and 8 other authors

Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes

Cheng-Yu Hsieh and 8 other authors