Cheng-Yu Hsieh

knowledge distillation

distilling step-by-step

chain-of-thought reasonings

retrieval-augmented generation

large language model

long context

chain-of-thought reasoning

natural language rationales

lost-in-the-middle

positional attention bias

attention calibration

multi-objective alignment

alignment tax

secure alignment

adversarial attack

SHORT BIO

I am a PhD student at the University of Washington. I am broadly interested in data-centric machine learning, with the vision of enabling the adoption of machine learning in a wide spectrum of practical applications. I develop techniques and tools that (1) help users efficiently create training datasets, (2) enrich dataset information to empower models without parameter scaling, and (3) communicate model behavior via data, so that ML can be reliably deployed in high-stakes scenarios.

Presentations

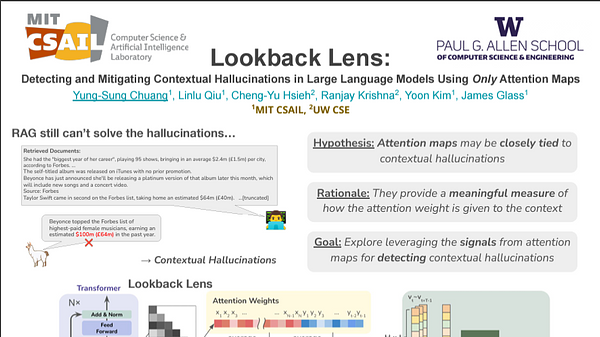

Lookback Lens: Detecting and Mitigating Contextual Hallucinations in Large Language Models Using Only Attention Maps

Yung-Sung Chuang and 5 other authors

Is C4 Dataset Enough for Pruning? An Investigation of Calibration Data for LLM Pruning

Abhinav Bandari and 7 other authors

Found in the middle: Calibrating Positional Attention Bias Improves Long Context Utilization

Cheng-Yu Hsieh and 10 other authors

Distilling Step-by-Step! Outperforming Larger Language Models with Less Training Data and Smaller Model Sizes

Cheng-Yu Hsieh and 8 other authors
