Bailin Wang

large language models

robustness

analysis

evaluation

generalization

reasoning

compositional generalization

parsing

generalizability

semantic parsing

memorization

domain generalization

decoding

sequence-to-sequence model

in-context learning

5 presentations

SHORT BIO

Bailin Wang is a postdoctoral associate at MIT. His current research aims at building structured models for natural language understanding and at improving generalization across data distributions.

Presentations

Learning to Decode Collaboratively with Multiple Language Models

Zejiang Shen and 4 other authors

Iterative Forward Tuning Boosts In-Context Learning in Language Models

Jiaxi Yang and 7 other authors

Reasoning or Reciting? Exploring the Capabilities and Limitations of Language Models Through Counterfactual Tasks

Zhaofeng Wu and 8 other authors

Improving Generalization in Language Model-based Text-to-SQL Semantic Parsing: Two Simple Semantic Boundary-based Techniques

Daking Rai and 3 other authors

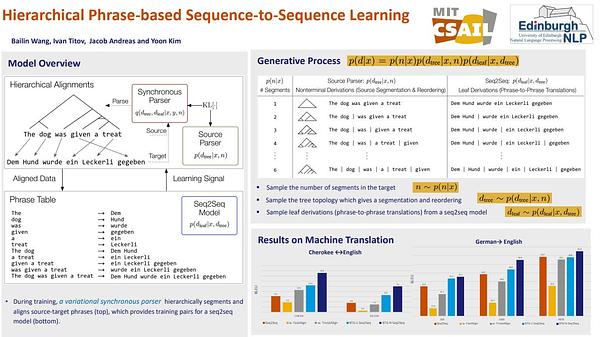

Hierarchical Phrase-Based Sequence-to-Sequence Learning

Bailin Wang and 3 other authors