Adina Williams

language models

natural language understanding

robustness

evaluation

fairness

retrieval

large language models

social bias

datasets

fallacies

meta-evaluation

in-context learning

named entities

acceptability judgements

8 presentations

27 views

SHORT BIO

Adina is a Research Scientist on the Fundamental AI Research (FAIR) team at Meta Platforms, Inc. in NYC. Her research spans several topics in NLP and computational linguistics, with a focus on dataset creation and model evaluation for humanlikeness, fairness, generalization and robustness.

Presentations

Evaluation after the LM boom: Frustrations, fallacies and the future

Adina Williams

Grammatical Gender's Influence on Distributional Semantics: A Causal Perspective

Karolina Stanczak and 4 other authors

Robustness of Named-Entity Replacements for In-Context Learning

Dennis Minn and 8 other authors

Evaluation after the LLM boom: frustrations, fallacies, and the future | VIDEO

Adina Williams

The first workshop on generalisation (benchmarking) in NLP Panel

Tatsunori Hashimoto and 2 other authors

Language model acceptability judgements are not always robust to context

Koustuv Sinha and 6 other authors

Perturbation Augmentation for Fairer NLP

Rebecca Qian and 5 other authors

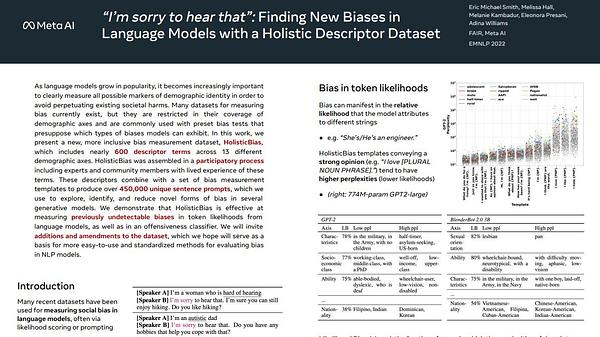

"I'm sorry to hear that": Finding New Biases in Language Models with a Holistic Descriptor Dataset

Eric Smith and 4 other authors