Jongwoo Ko

Graduate student @ KAIST

ml: representation learning

reasoning

cross-lingual

large language models

logical reasoning

contrastive learning

overfitting

mixup

inference acceleration

machine learning (ml)

ml: classification and regression

ml: deep neural network algorithms

cv: representation learning for vision

ml: unsupervised & self-supervised learning

math qa

SHORT BIO

I am a Ph.D. student at KAIST AI and a member of OSI Lab (Advisor: Se-young Yun, KAIST). My current research focuses on efficient Transformer models, particularly generative language models such as T5 and LLaMA. I am also interested in efficient Vision Transformers and multi-modal models. My overall aim is to make large Transformer models more efficient.

Until last year, my research focused on developing new algorithms for real-world challenges in the machine learning pipeline, such as label noise and class imbalance, while providing statistical or mathematical guarantees. I received a master's degree from the Department of Industrial and Systems Engineering at KAIST under the supervision of Prof. Heeyoung Kim.

Presentations

Towards Difficulty-Agnostic Efficient Transfer Learning for Vision-Language Models

Yongjin Yang and 2 other authors

Fast and Robust Early-Exiting Framework for Autoregressive Language Models with Synchronized Parallel Decoding

Sangmin Bae and 3 other authors

Revisiting Intermediate Layer Distillation for Compressing Language Models: An Overfitting Perspective

Jongwoo Ko

A Gift from Label Smoothing: Robust Training with Adaptive Label Smoothing via Auxiliary Classifier under Label Noise

Jongwoo Ko and 2 other authors

Self-Contrastive Learning: Single-viewed Supervised Contrastive Framework using Sub-network

Sangmin Bae and 5 other authors

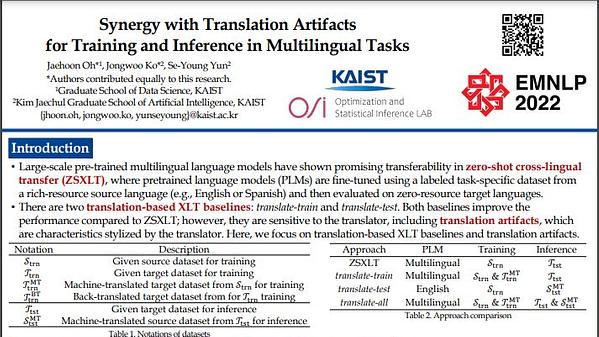

Synergy with Translation Artifacts for Training and Inference in Multilingual Tasks

Jaehoon Oh and 1 other author