Vladislav Lialin

Graduate student @ University of Massachusetts Lowell

scaling laws

pre-training

small models

glue

masked language model

scale

limited vocabulary

simplified language

power laws

compute optimality

model analysis

cost-effectiveness

probing

roberta

3 presentations

SHORT BIO

I am a computer science PhD student at the University of Massachusetts Lowell, advised by Anna Rumshisky. My research areas include continual learning for large language models, multimodal learning, and model analysis. I think that every task is a language modeling task if you try hard enough.

Presentations

Honey, I Shrunk the Language: Language Model Behavior at Reduced Scale.

Vijeta Deshpande and 4 other authors

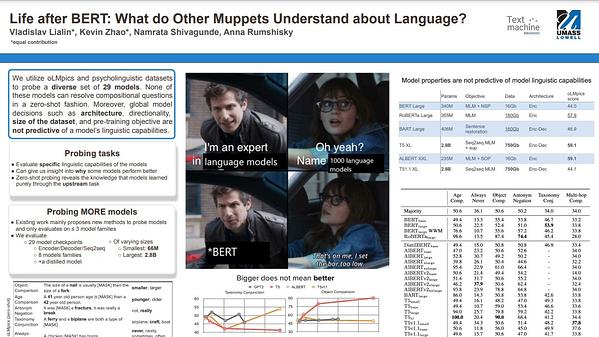

Life after BERT: What do Other Muppets Understand about Language?

Vladislav Lialin