Yuning Mao

University of Illinois Urbana-Champaign

prompt tuning

language model

robustness

transformers

low-resource summarization

distant supervision

nlp

text classification

weak supervision

vocabulary

multilingual

optimization

large language models

attention

transfer learning

9 presentations

12 views

SHORT BIO

Yuning Mao is a research scientist at Meta AI. He received his Ph.D. in Computer Science from the University of Illinois at Urbana-Champaign (UIUC) in 2022. His research goal is to help people acquire information and knowledge from unstructured content more effectively and efficiently. He has worked on a range of topics toward this goal, including text summarization, question answering, pre-trained language models, taxonomy construction, and recommendation.

Presentations

MPT: Multimodal Prompt Tuning for Zero-shot Instruction Learning

Taowen Wang and 13 other authors

MART: Improving LLM Safety with Multi-round Automatic Red-Teaming

Suyu Ge and 7 other authors

APrompt: Attention Prompt Tuning for Efficient Adaptation of Pre-trained Language Models

Qifan Wang and 10 other authors

RoAST: Robustifying Language Models via Adversarial Perturbation with Selective Training

Jaehyung Kim and 9 other authors

XLM-V: Overcoming the Vocabulary Bottleneck in Multilingual Masked Language Models

Davis Liang and 7 other authors

Residual Prompt Tuning: Improving Prompt Tuning with Residual Reparameterization

Anastasia Razdaibiedina and 6 other authors

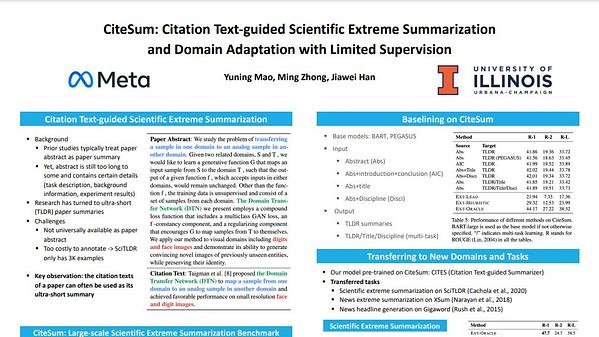

CiteSum: Citation Text-guided Scientific Extreme Summarization and Domain Adaptation with Limited Supervision

Yuning Mao and 2 other authors

Eider: Empowering Document-level Relation Extraction with Efficient Evidence Extraction and Inference-stage Fusion

Yiqing Xie and 4 other authors

UniPELT: A Unified Framework for Parameter-Efficient Language Model Tuning

Yuning Mao and 7 other authors