Clara Meister

information theory

cognitive modeling

decoding

mutual information

large language models

multimodal retrieval

regularization

reading

document understanding

statistics

entropy

uniform information density

replication

question-answering

FrameNet

Presentations: 12

Number of views: 10

Presentations

Towards a Semantically-aware Surprisal Theory

Clara Meister and 2 other authors

How to Compute the Probability of a Word

Tiago Pimentel and 1 other author

The Role of n-gram Smoothing in the Age of Neural Networks

Luca Malagutti and 5 other authors

Revisiting the Optimality of Word Lengths

Tiago Pimentel and 4 other authors

Language Model Quality Correlates with Psychometric Predictive Power in Multiple Languages

Cui Ding and 3 other authors

Testing the Predictions of Surprisal Theory in 11 Languages

Cui Ding and 4 other authors

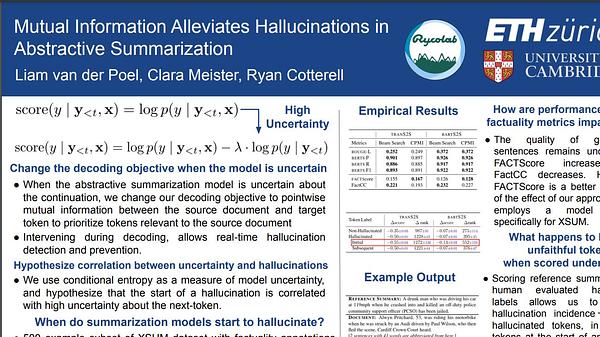

Mutual Information Alleviates Hallucinations in Abstractive Summarization

Liam van der Poel and 2 other authors

Estimating the Entropy of Linguistic Distributions

Aryaman Arora and 2 other authors

High probability or low information? The probability–quality paradox in language generation

Clara Meister and 3 other authors

Analyzing Wrap-Up Effects through an Information-Theoretic Lens

Clara Meister and 4 other authors

A Plug-and-Play Method for Controlled Text Generation

Damian Pascual Ortiz and 4 other authors

A Plug-and-Play Method for Controlled Text Generation

Damian Pascual Ortiz and 4 other authors