Chenglong Wang

Post-graduate student @ Northeastern University

reinforcement learning

knowledge distillation

GLUE

sequence generation

large language model

actor-critic

dynamic sampling

instruction tuning

Evol-Instruct

5 presentations

SHORT BIO

I am currently a third-year Ph.D. student at the Natural Language Processing Laboratory, Department of Computer Science and Technology, Northeastern University, China, under the guidance of Professor Tong Xiao and Professor Jingbo Zhu.

I received my bachelor's degree in Computer Science and Technology from Northeastern University, where I also completed my master's degree in 2020.

My research focuses on deep reinforcement learning methods for sequence generation, with particular emphasis on two areas: (1) improving the training efficiency of reinforcement-learning-based sequence generation models, and (2) constructing and training reward models for deep reinforcement learning.

Presentations

RoVRM: A Robust Visual Reward Model Optimized via Auxiliary Textual Preference Data

Yang Gan and 9 other authors

Revealing the Parallel Multilingual Learning within Large Language Models

Yongyu Mu and 10 other authors

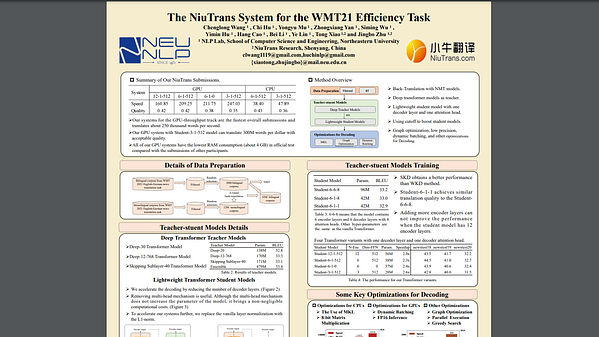

The NiuTrans System for the WMT 2021 Efficiency Task

Chenglong Wang

Improved Knowledge Distillation for Pre-trained Language Models via Knowledge Selection

Chenglong Wang

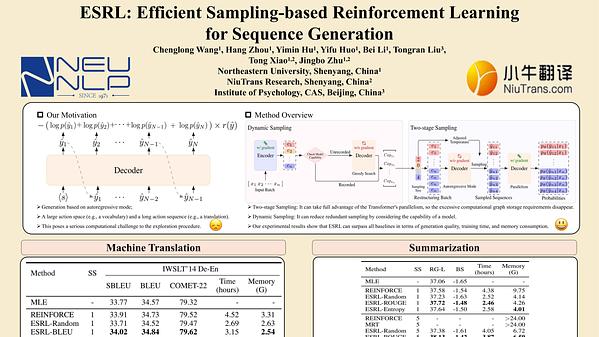

ESRL: Efficient Sampling-Based Reinforcement Learning for Sequence Generation

Chenglong Wang and 7 other authors