Christopher Manning

low-resource NLP

multilinguality

multilingual

generalization

cross-lingual transfer

education

NLI

parsing

interpretability

domain adaptation

XLM-RoBERTa

natural language inference

consistency

disinformation

transfer learning

16 presentations

123 views

1 citation

SHORT BIO

Christopher Manning is the inaugural Thomas M. Siebel Professor in Machine Learning in the Departments of Linguistics and Computer Science at Stanford University, Director of the Stanford Artificial Intelligence Laboratory (SAIL), and an Associate Director of the Stanford Institute for Human-Centered Artificial Intelligence (HAI). His research goal is to build computers that can intelligently process, understand, and generate human languages. Manning was an early leader in applying Deep Learning to Natural Language Processing (NLP), with well-known research on the GloVe model of word vectors, attention, machine translation, question answering, self-supervised model pre-training, tree-recursive neural networks, machine reasoning, dependency parsing, sentiment analysis, and summarization. He also focuses on computational linguistic approaches to parsing, natural language inference, and multilingual language processing, and is a principal developer of Stanford Dependencies and Universal Dependencies. Manning has coauthored leading textbooks on statistical approaches to NLP (Manning and Schütze, 1999) and information retrieval (Manning, Raghavan, and Schütze, 2008), as well as linguistic monographs on ergativity and complex predicates. His online CS224N Natural Language Processing with Deep Learning videos have been watched by hundreds of thousands of people. He is an ACM Fellow, an AAAI Fellow, an ACL Fellow, and a Past President of the ACL (2015). His research has won ACL, Coling, EMNLP, and CHI Best Paper Awards, and an ACL Test of Time Award. He has a B.A. (Hons) from The Australian National University, a Ph.D. from Stanford (1994), and an Honorary Doctorate from U. Amsterdam (2023). He held faculty positions at Carnegie Mellon University and the University of Sydney before returning to Stanford. He is the founder of the Stanford NLP group (@stanfordnlp) and manages development of the Stanford CoreNLP and Stanza software.

Presentations

MSCAW-coref: Multilingual, Singleton and Conjunction-Aware Word-Level Coreference Resolution

Houjun Liu and 4 other authors

Statistical Uncertainty in Word Embeddings: GloVe-V

Andrea Vallebueno and 3 other authors

Predicting Narratives of Climate Obstruction in Social Media Advertising

Harri Rowlands and 3 other authors

pyvene: A Library for Understanding and Improving PyTorch Models via Interventions

Zhengxuan Wu and 7 other authors

Panel: Implications of LLMs

Subbarao Kambhampati and 4 other authors

Academic NLP research in the Age of LLMs: Nothing but blue skies!

Christopher Manning

Mini But Mighty: Efficient Multilingual Pretraining with Linguistically-Informed Data Selection

Tolulope Ogunremi and 2 other authors

Enhancing Self-Consistency and Performance of Pre-Trained Language Models through Natural Language Inference

Eric Mitchell and 7 other authors

JamPatoisNLI: A Jamaican Patois Natural Language Inference Dataset

Ruth-Ann Armstrong and 2 other authors

The Place of Linguistics and Symbolic Structures

Chitta Baral and 4 other authors

Synthetic Disinformation Attacks on Automated Fact Verification Systems

Yibing Du and 2 other authors

Answering Open-Domain Questions of Varying Reasoning Steps from Text

Peng Qi and 3 other authors

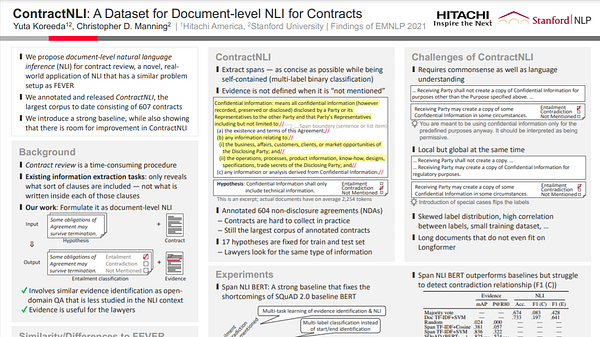

ContractNLI: A Dataset for Document-level Natural Language Inference for Contracts

Yuta Koreeda and 1 other author
