5 presentations

2 views

SHORT BIO

Jingfeng Yang is an Applied Scientist at Amazon Search. He received an M.S. in Computer Science from Georgia Tech and was also a research intern at Amazon, Google, and Microsoft Research. He has broad interests in Natural Language Processing and Machine Learning. His research goals are to 1) solve the data scarcity problem, 2) improve the generalizability of models, and 3) design controllable and interpretable models, by 1) using unlabeled, out-of-domain, or augmented data, 2) incorporating external knowledge or inductive biases (e.g., intermediate abstractions, model architecture biases) into models, and 3) large-scale pretraining. He pursues these goals in 1) text generation, 2) semantic parsing / question answering, 3) multilingual NLP, and 4) other natural language and multimodal understanding tasks.

Presentations

SEQZERO: Few-shot Compositional Semantic Parsing with Sequential Prompts and Zero-shot Models

Jingfeng Yang and 5 other authors

SUBS: Subtree Substitution for Compositional Semantic Parsing

Jingfeng Yang and 2 other authors

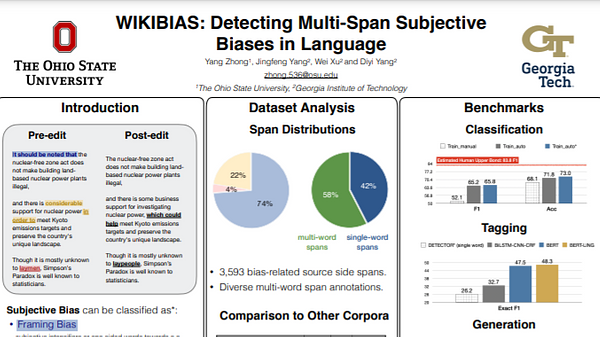

WIKIBIAS: Detecting Multi-Span Subjective Biases in Language

Yang Zhong and 3 other authors

Frustratingly Simple but Surprisingly Strong: Using Language-Independent Features for Zero-shot Cross-lingual Semantic Parsing

Jingfeng Yang and 3 other authors

WIKIBIAS: Detecting Multi-Span Subjective Biases in Language

Yang Zhong and 3 other authors