Arya D. McCarthy

Johns Hopkins University

low-resource

multilingual

domain adaptation

neural machine translation

low resource languages

opinion

low-resource languages

indigenous

computational morphology

word alignment

unseen

language adaptation

meta-analysis

unsupervised morphology

pre-trained multilingual sequence-to-sequence models

8 presentations

SHORT BIO

PhD student at Johns Hopkins University.

Presentations

Meeting the Needs of Low-Resource Languages: The Value of Automatic Alignments via Pretrained Models

Abteen Ebrahimi and 7 other authors

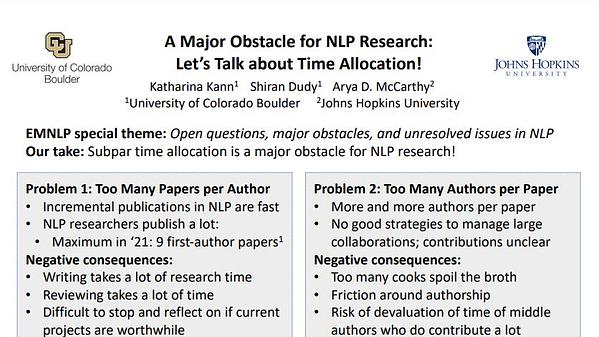

A Major Obstacle for NLP Research: Let's Talk about Time Allocation!

Katherina von der Wense and 2 other authors

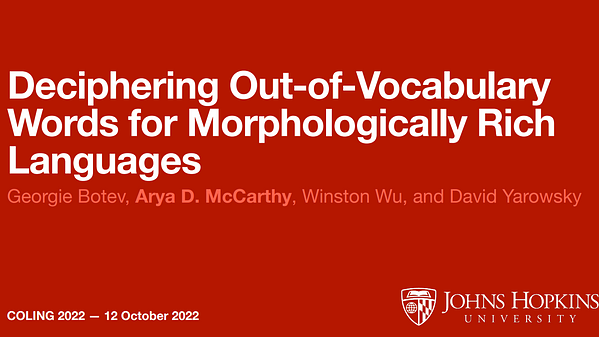

Deciphering and Characterizing Out-of-Vocabulary Words for Morphologically Rich Languages

Arya D. McCarthy and 3 other authors

Morphological Processing of Low-Resource Languages: Where We Are and What’s Next

Adam Wiemerslage and 6 other authors

Pre-Trained Multilingual Sequence-to-Sequence Models: A Hope for Low-Resource Language Translation?

En-Shiun Lee and 6 other authors

Jump-Starting Item Parameters for Adaptive Language Tests

Arya D. McCarthy and 5 other authors