Yizhong Wang

PhD student @ University of Washington

language models

zero-shot learning

benchmark

few-shot learning

benchmarking

crowdsourcing

knowledge

cross-task generalization

instruction tuning

instruction-based models

vision language models

web agents

temporal alignment

Short Bio

Yizhong Wang is a 4th-year PhD student at the University of Washington. His current research focuses on building general-purpose models that can follow instructions and generalize across tasks in a zero-shot or few-shot fashion. He is also broadly interested in question answering and knowledge-augmented language models.

Presentations

Set the Clock: Temporal Alignment of Pretrained Language Models

Bowen Zhao and 4 other authors

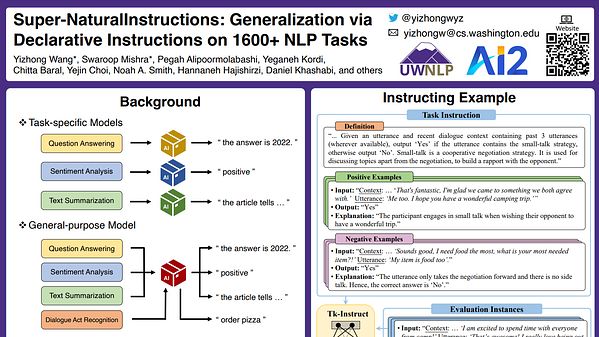

Super-NaturalInstructions: Generalization via Declarative Instructions on 1600+ NLP Tasks

Yizhong Wang

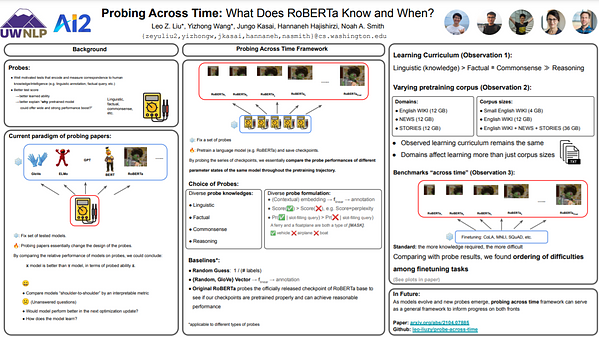

Probing Across Time: What Does RoBERTa Know and When?

Zeyu Liu and 1 other author
