Minghao Wu

machine translation

instruction tuning

knowledge distillation

social media

hate speech

large language models

online hate speech

document-level machine translation

llms

autoregressive nmt; non-autoregressive nmt; universal pre-training framework

llm

molecular property prediction

document-level neural machine translation

batch-level information

minority groups

11 presentations, 2 views

Short Bio

Minghao Wu is currently a Ph.D. student at Monash University.

Presentations

Rewarding What Matters: Step-by-Step Reinforcement Learning for Task-Oriented Dialogue

Huifang Du and 5 other authors

Mixture-of-Skills: Learning to Optimize Data Usage for Fine-Tuning Large Language Models

Minghao Wu and 3 other authors

Demystifying Instruction Mixing for Fine-tuning Large Language Models

Renxi Wang and 7 other authors

Importance-Aware Data Augmentation for Document-Level Neural Machine Translation

Minghao Wu and 4 other authors

LaMini-LM: A Diverse Herd of Distilled Models from Large-Scale Instructions

Minghao Wu and 4 other authors

Document Flattening: Beyond Concatenating Context for Document-Level Neural Machine Translation

Minghao Wu and 3 other authors

Universal Conditional Masked Language Pre-training for Neural Machine Translation

Pengfei Li and 4 other authors

Uncertainty-Aware Balancing for Multilingual and Multi-Domain Neural Machine Translation Training

Minghao Wu and 5 other authors

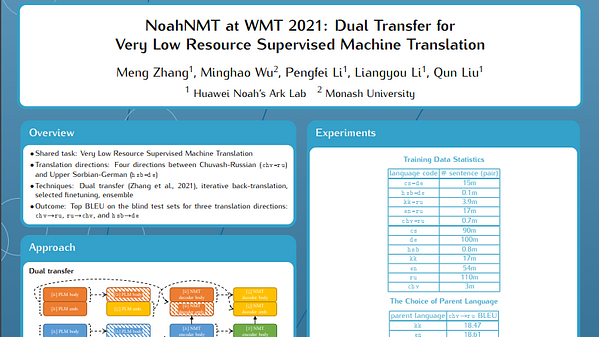

NoahNMT at WMT 2021: Dual Transfer for Very Low Resource Supervised Machine Translation

Meng Zhang and 4 other authors

TransAgents: Build Your Translation Company with Language Agents

Minghao Wu and 2 other authors