Yulia Tsvetkov

University of Washington

Keywords: survey, stress test, social media, political communication, llm, agenda-setting, summarization, language generation, framing, text generation, large language models, politics, information warfare, bias, diffusion language models

47 presentations · 55 views · 2 citations

SHORT BIO

I am an assistant professor in the Paul G. Allen School of Computer Science & Engineering at the University of Washington. I'm also an adjunct professor at the Language Technologies Institute at CMU. I work on Natural Language Processing, a subfield of computer science focused on the computational processing of human languages. I am particularly interested in hybrid solutions at the intersection of machine learning and theoretical or social linguistics, i.e., solutions that combine interesting learning and modeling methods with insights about human languages or about the people who speak them.

Much of my research group's work focuses on NLP for social good, multilingual NLP, and language generation. This research is motivated by a unified goal: to extend the capabilities of human language technology beyond individual populations and across language boundaries, thereby enabling NLP for diverse and disadvantaged users, the users who need it most.

Previously, I was an assistant professor in the Language Technologies Institute, School of Computer Science at Carnegie Mellon University, and before that a postdoc in the Stanford NLP Group. I got my PhD from CMU.

Presentations

Know Your Limits: A Survey of Abstention in Large Language Models

Bingbing Wen and 6 other authors

Biased LLMs can Influence Political Decision-Making

Jillian Fisher and 8 other authors

CulturalBench: A Robust, Diverse and Challenging Benchmark for Measuring LMs' Cultural Knowledge Through Human-AI Red-Teaming

Yu Ying Chiu and 10 other authors

ComPO: Community Preferences for Language Model Personalization

Sachin Kumar and 4 other authors

ALPACA AGAINST VICUNA: Using LLMs to Uncover Memorization of LLMs

Aly Kassem and 7 other authors

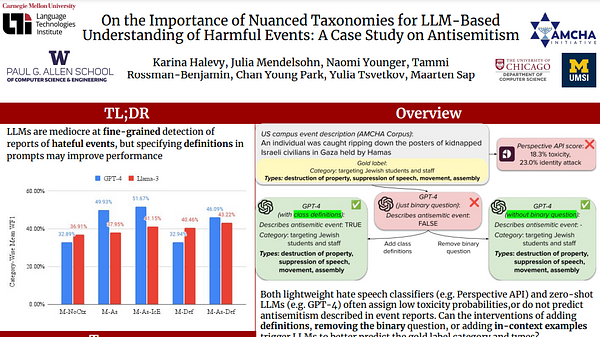

On the Importance of Nuanced Taxonomies for LLM-Based Understanding of Harmful Events: A Case Study on Antisemitism

Karina Halevy and 5 other authors

ValueScope: Unveiling Implicit Norms and Values via Return Potential Model of Social Interactions

Chan Young Park and 6 other authors

Modular Pluralism: Pluralistic Alignment via Multi-LLM Collaboration

Shangbin Feng and 6 other authors

Teaching LLMs to Abstain across Languages via Multilingual Feedback

Shangbin Feng and 8 other authors

Locating Information Gaps and Narrative Inconsistencies Across Languages: A Case Study of LGBT People Portrayals on Wikipedia

Farhan Samir and 4 other authors

Voices Unheard: NLP Resources and Models for Yorùbá Regional Dialects

Orevaoghene Ahia and 7 other authors

Can LLM Graph Reasoning Generalize beyond Pattern Memorization?

Yizhuo Zhang and 6 other authors

Don't Hallucinate, Abstain: Identifying LLM Knowledge Gaps via Multi-LLM Collaboration

Shangbin Feng and 5 other authors

Stumbling Blocks: Stress Testing the Robustness of Machine-Generated Text Detectors Under Attacks

Yichen Wang and 7 other authors

Knowledge Crosswords: Geometric Knowledge Reasoning with Large Language Models

Wenxuan Ding and 6 other authors

DELL: Generating Reactions and Explanations for LLM-Based Misinformation Detection

Herun Wan and 5 other authors