Sang-Woo Lee

NAVER Cloud, NAVER AI Lab, KAIST

in-context learning

document retrieval

pre-training

multi-hop qa

language model

pretraining

machine learning

large scale

bayesian optimization

prompt

questionanswering

large language model

gpt3

opendomainqa

questionretrieval

10 presentations

7 views

SHORT BIO

Sang-Woo Lee is currently the leader of the Language Research team, the NLP group of NAVER AI Lab, and the technical leader of the Conversation team, an NLP modeling group at NAVER CLOVA that works on chatbots, callbots, and large-scale NLU models. He has also been an adjunct professor at the KAIST Kim Jaechul Graduate School of AI (KAIST AI) since October 2021. His recent research interests include task-oriented dialogue, large-scale language models (GPT-3 scale), and a variety of other NLP tasks. When he joined Naver in July 2018, he founded and became the first member of DUET, an AI ARS project that has since been extended into the Contact Center AI (CCAI) project (AiCall, CareCall, …) at Naver and Line.

Presentations

Query-Efficient Black-Box Red Teaming via Bayesian Optimization

Deokjae Lee and 6 other authors

Prompt-Augmented Linear Probing: Scaling Beyond The Limit of Few-shot In-Context Learners

Hyunsoo Cho and 6 other authors

Ground-Truth Labels Matter: A Deeper Look into Input-Label Demonstrations

Junyeob Kim and 7 other authors

On the Effect of Pretraining Corpora on In-context Learning by a Large-scale Language Model

Seongjin Shin and 1 other author

On the Effect of Pretraining Corpora on In-context Few-shot Learning by a Large-scale Language Model

Hwijeen Ahn and 8 other authors

Two-Step Question Retrieval for Open-Domain QA

Juhee Son and 6 other authors

What Changes Can Large-scale Language Models Bring? Intensive Study on HyperCLOVA: Billions-scale Korean Generative Pretrained Transformers

Sang-Woo Lee

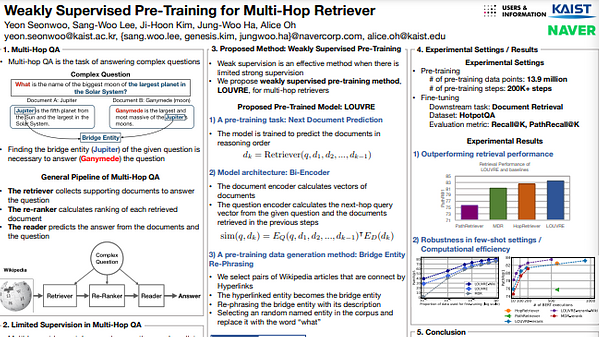

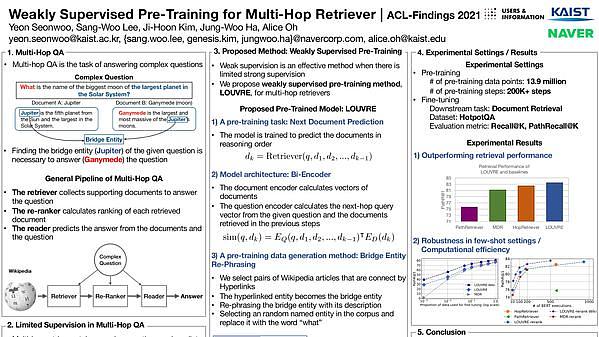

Weakly Supervised Pre-Training for Multi-Hop Retriever

Yeon Seonwoo and 4 other authors

Weakly Supervised Pre-Training for Multi-Hop Retriever

Yeon Seonwoo and 4 other authors

Weakly Supervised Pre-Training for Multi-Hop Retriever

Yeon Seonwoo and 4 other authors