Jack Hessel

syntax

bert

benchmark

privacy

vision and language

distillation

word order

humor

large language model

chain-of-thought

selective prediction; vision-language reasoning; reliability; confidence calibration

Presentations: 9

Views: 39

SHORT BIO

Jack is a Research Scientist at the Allen Institute for AI (AI2).

Presentations

Selective "Selective Prediction": Reducing Unnecessary Abstention in Vision-Language Reasoning

Tejas Srinivasan and 6 other authors

Do Androids Laugh at Electric Sheep? Humor "Understanding" Benchmarks from The New Yorker Caption Contest

Jack Hessel and 7 other authors

Symbolic Chain-of-Thought Distillation: Small Models Can Also "Think" Step-by-Step

Liunian Harold Li and 5 other authors

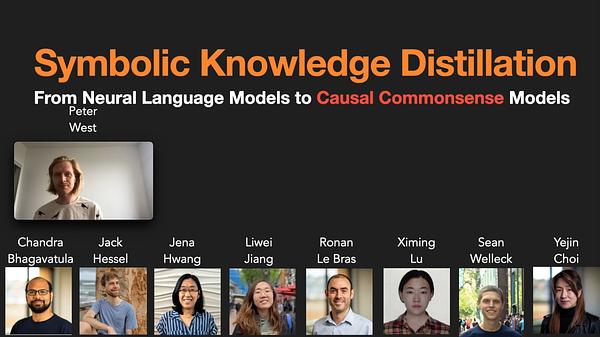

Symbolic Knowledge Distillation: from General Language Models to Commonsense Models

Peter West and 8 other authors

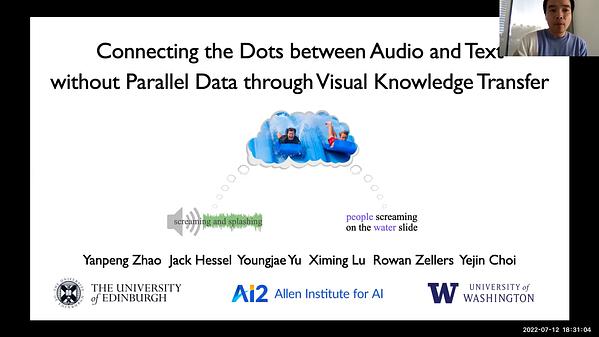

Connecting the Dots between Audio and Text without Parallel Data through Visual Knowledge Transfer

Yanpeng Zhao and 5 other authors

Reframing Human-AI Collaboration for Generating Free-Text Explanations

Sarah Wiegreffe and 4 other authors

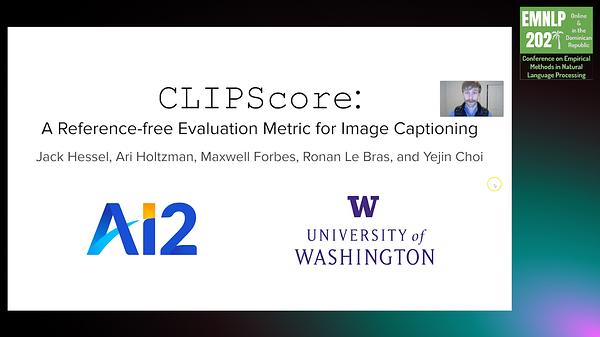

CLIPScore: A Reference-free Evaluation Metric for Image Captioning

Jack Hessel and 4 other authors

CLIPScore: A Reference-free Evaluation Metric for Image Captioning

Jack Hessel and 4 other authors

How effective is BERT without word ordering? Implications for language understanding and data privacy

Jack Hessel and 1 other author