Zhaofeng Wu

MIT

efficiency

document

machine translation

transformers

zero-shot

semantics

meta-learning

few-shot

probing

prompting

finetuning

semantic dependencies

pretrained transformer

efficient methods

multi-task learning

6 presentations

12 views

SHORT BIO

Zhaofeng Wu is a PhD student at MIT CSAIL, working with Professor Yoon Kim. He was previously a predoctoral researcher at AI2. He received his M.S., B.S., and B.A. from the University of Washington, where he worked with the UW NLP group.

Presentations

Continued Pretraining for Better Zero- and Few-Shot Promptability

Zhaofeng Wu and 6 other authors

Modeling Context With Linear Attention for Scalable Document-Level Translation

Zhaofeng Wu

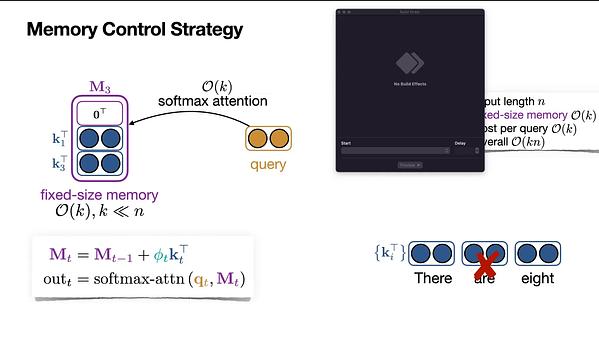

ABC: Attention with Bounded-memory Control

Hao Peng and 7 other authors

Understanding Mention Detector-Linker Interaction in Neural Coreference Resolution

Zhaofeng Wu and 1 other author

Infusing Finetuning with Semantic Dependencies

Zhaofeng Wu and 2 other authors

Modeling Context With Linear Attention for Scalable Document-Level Translation

Zhaofeng Wu and 3 other authors