Genta I Winata

benchmark

low-resource

code-mixing

code-switching

fine-tuning

cross-lingual

named entity recognition

part-of-speech tagging

cross-domain

style

low-resource nlp

equity

efficiency

cross-lingual ability

multilingual pre-trained model

8

presentations

2

number of views

SHORT BIO

I completed my Ph.D. at the Electronic and Computer Engineering Department and Center for AI Research (CAiRE), The Hong Kong University of Science and Technology. My current research interests lie primarily in the area of the Natural Language Understanding, Multilingual, Cross-lingual, Code-Switching, Dialogue, and Speech.

Presentations

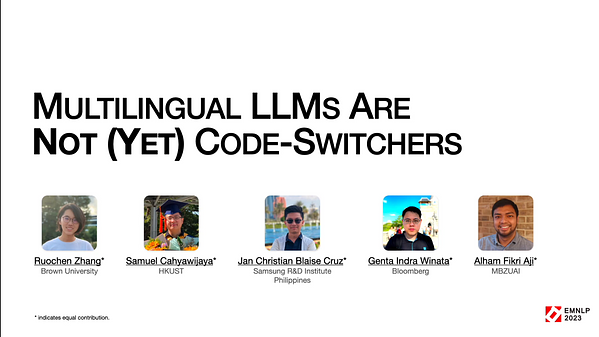

Multilingual Large Language Models Are Not (Yet) Code-Switchers

Ruochen Zhang and 4 other authors

GlobalBench: A Benchmark for Global Progress in Natural Language Processing

Yueqi Song and 10 other authors

One Country, 700+ Languages: NLP Challenges for Underrepresented Languages and Dialects in Indonesia

Alham Fikri Aji and 10 other authors

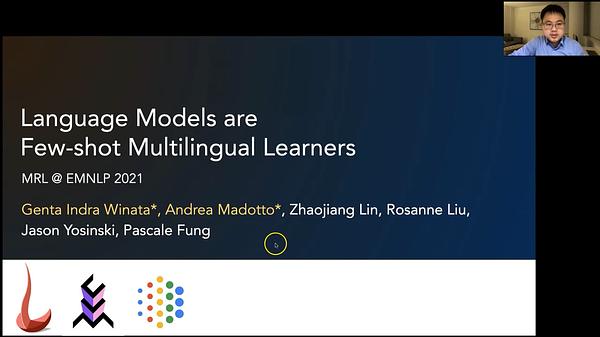

Language Models are Few-shot Multilingual Learners

Genta I Winata

IndoNLG: Benchmark and Resources for Evaluating Indonesian Natural Language Generation

Samuel Cahyawijaya and 11 other authors

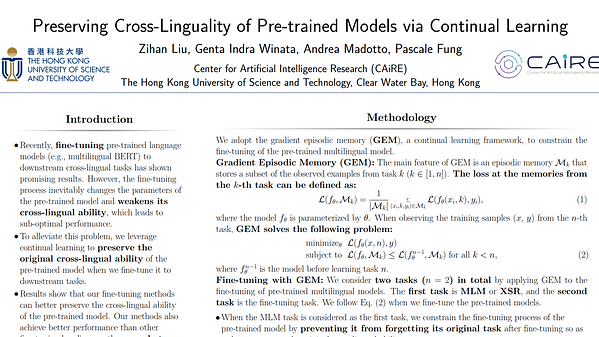

Preserving Cross-Linguality of Pre-trained Models via Continual Learning

Zihan Liu and 3 other authors

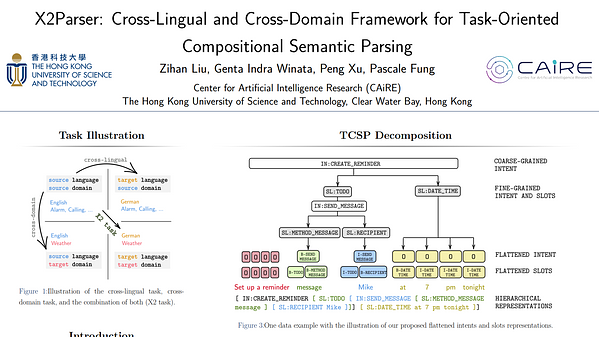

X2Parser: Cross-Lingual and Cross-Domain Framework for Task-Oriented Compositional Semantic Parsing

Zihan Liu and 3 other authors

On the Importance of Word Order Information in Cross-Lingual Sequence Labeling

Zihan Liu and 5 other authors