Yohei Oseki

Associate Professor @ University of Tokyo, Department of Language and Information Sciences, Tokyo, Japan

cognitive modeling

syntax

psycholinguistics

syntactic supervision

language model

computational psycholinguistics

language models

fMRI

linguistic typology

linguistics

morphology

composition

eye-tracking

efficiency

attention

11 presentations

19 views

Presentations

Can Language Models Induce Grammatical Knowledge from Indirect Evidence?

Miyu Oba and 6 other authors

Tree-Planted Transformers: Unidirectional Transformer Language Models with Implicit Syntactic Supervision

Ryo Yoshida and 2 other authors

Modeling Overregularization in Children with Small Language Models

Akari Haga and 6 other authors

Emergent Word Order Universals from Cognitively-Motivated Language Models

Tatsuki Kuribayashi and 5 other authors

Psychometric Predictive Power of Large Language Models

Tatsuki Kuribayashi and 2 other authors

JBLiMP: Japanese Benchmark of Linguistic Minimal Pairs

Taiga Someya and 1 other author

How Much Syntactic Supervision is "Good Enough"?

Yohei Oseki and 1 other author

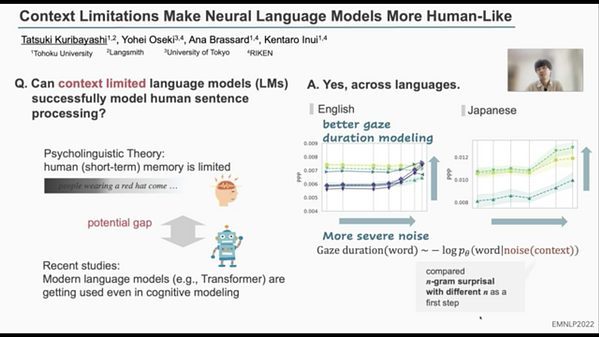

Context Limitations Make Neural Language Models More Human-Like

Tatsuki Kuribayashi and 3 other authors

Effective Batching for Recurrent Neural Network Grammars

Hiroshi Noji and 1 other author

Lower Perplexity is Not Always Human-Like

Tatsuki Kuribayashi and 5 other authors

Composition, Attention, or Both?

Ryo Yoshida and 1 other author