Joel Jang

continual learning

large language models

privacy

reasoning

instruction following

pretrained language models

lifelong learning

multilingualism

knowledge acquisition

prompt tuning

temporal adaptation

large language model

temporal misalignment

lifelong benchmark

unlearning

9 presentations

3 views

1 citation

Short Bio

Hello! I am a second-year integrated M.S. and Ph.D. student at the Language & Knowledge Lab at KAIST, advised by Minjoon Seo. My main research goal is to build large neural models that are applicable to real-world scenarios by addressing the inherent limitations of current neural models.

Presentations

LangBridge: Multilingual Reasoning Without Multilingual Supervision

Dongkeun Yoon and 5 other authors

Semiparametric Token-Sequence Co-Supervision

Hyunji Amy Lee and 6 other authors

How Well Do Large Language Models Truly Ground?

Hyunji Amy Lee and 6 other authors

Efficiently Enhancing Zero-Shot Performance of Instruction Following Model via Retrieval of Soft Prompt

Seonghyeon Ye and 4 other authors

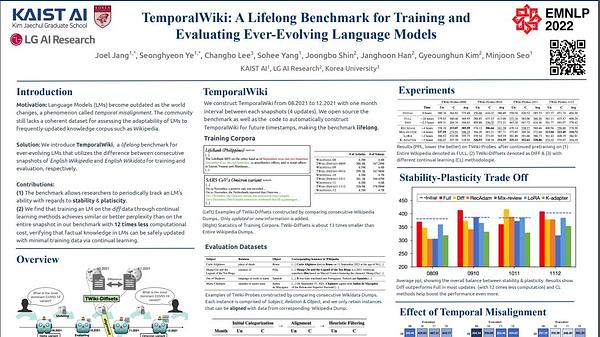

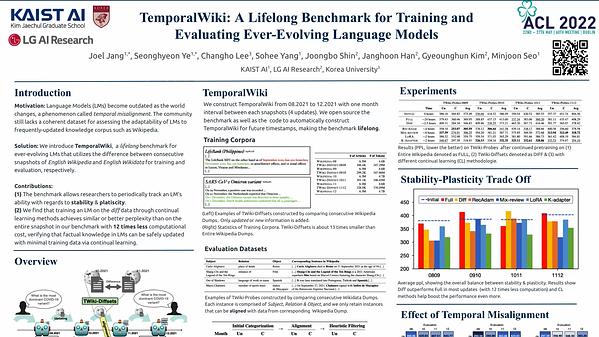

TemporalWiki: A Lifelong Benchmark for Training and Evaluating Ever-Evolving Language Models

Joel Jang

Knowledge Unlearning for Mitigating Privacy Risks in Language Models

Joel Jang

TemporalWiki: A Lifelong Benchmark for Training and Evaluating Ever-Evolving Language Models

Joel Jang

Towards Continual Knowledge Learning of Language Models

Joel Jang

TemporalWiki: A Lifelong Benchmark for Training and Evaluating Ever-Evolving Language Models

Joel Jang