Yonatan Belinkov

interpretability

fairness

concept removal

text-to-image

multimodal

explainability

model editing

bias

privacy

information retrieval

transformers

natural language processing

benchmarking

nli

multilingual

21 presentations

19 views

SHORT BIO

Yonatan Belinkov is an assistant professor at the Henry and Marilyn Taub Faculty of Computer Science at the Technion. He was previously a Postdoctoral Fellow at the Harvard School of Engineering and Applied Sciences and the MIT Computer Science and Artificial Intelligence Laboratory. His current research focuses on the interpretability and robustness of neural network models of human language, and it has been published at various NLP and ML venues. His PhD dissertation at MIT analyzed internal language representations in deep learning models, with applications to machine translation and speech recognition. He has been awarded the Harvard Mind, Brain, and Behavior Postdoctoral Fellowship and is currently an Azrieli Early Career Faculty Fellow.

Presentations

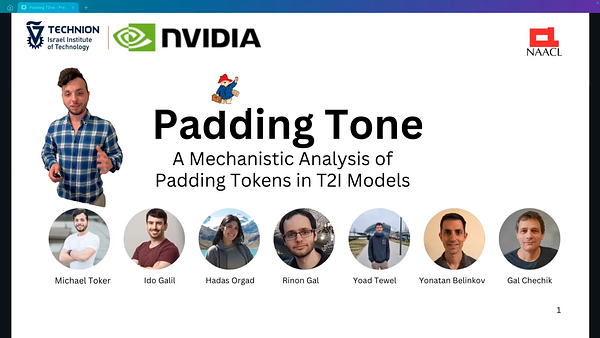

Padding Tone: A Mechanistic Analysis of Padding Tokens in T2I Models

Michael Toker and 6 other authors

Backward Lens: Projecting Language Model Gradients into the Vocabulary Space

Shachar Katz and 3 other authors

Fast Forwarding Low-Rank Training

Adir Rahamim and 3 other authors

Diffusion Lens: Interpreting Text Encoders in Text-to-Image Pipelines

Michael Toker and 4 other authors

Leveraging Prototypical Representations for Mitigating Social Bias without Demographic Information

Shadi Iskander and 2 other authors

ReFACT: Updating Text-to-Image Models by Editing the Text Encoder

Dana Arad and 2 other authors

ContraSim – Analyzing Neural Representations Based on Contrastive Learning

Adir Rahamim and 1 other author

Generating Benchmarks for Factuality Evaluation of Language Models

Dor Muhlgay and 9 other authors

A Dataset for Metaphor Detection in Early Medieval Hebrew Poetry

Michael Toker and 4 other authors

VISIT: Visualizing and Interpreting the Semantic Information Flow of Transformers

Shahar Katz and 1 other author

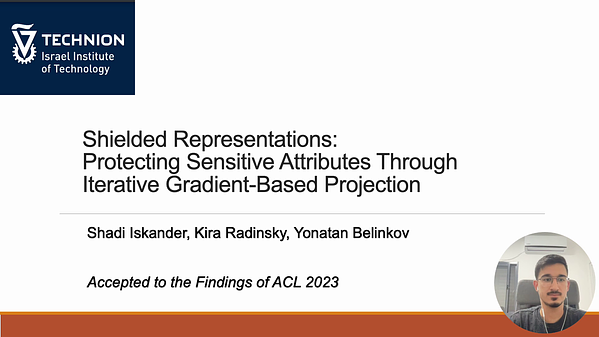

Shielded Representations: Protecting Sensitive Attributes Through Iterative Gradient-Based Projection

Shadi Iskander and 2 other authors

What Are You Token About? Dense Retrieval as Distributions Over the Vocabulary

Ori Ram and 5 other authors

Emergent Quantized Communication

Boaz Carmeli and 2 other authors

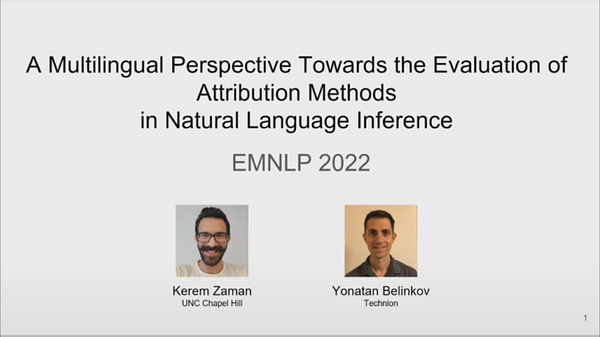

A Multilingual Perspective Towards the Evaluation of Attribution Methods in Natural Language Inference

Kerem Zaman and 1 other author