Matthew E Peters

language modeling

data augmentation

contrastive

controlled generation

meta-learning

few-shot learning

question answering

interpretability

language model

generation

language grounding

neuro-symbolic

controllable text generation

explainability

domain adaptation

16 presentations

34 views

1 citation

Presentations

TESS: Text-to-Text Self-Conditioned Simplex Diffusion

Rabeeh Karimi Mahabadi and 6 other authors

HINT: Hypernetwork Instruction Tuning for Efficient Zero- and Few-Shot Generalisation

Hamish Ivison and 4 other authors

FiD-ICL: A Fusion-in-Decoder Approach for Efficient In-Context Learning

Qinyuan Ye and 4 other authors

Peek Across: Improving Multi-Document Modeling via Cross-Document Question-Answering

Avi Caciularu and 4 other authors

AdapterSoup: Weight Averaging to Improve Generalization of Pretrained Language Models

Alexandra Chronopoulou and 3 other authors

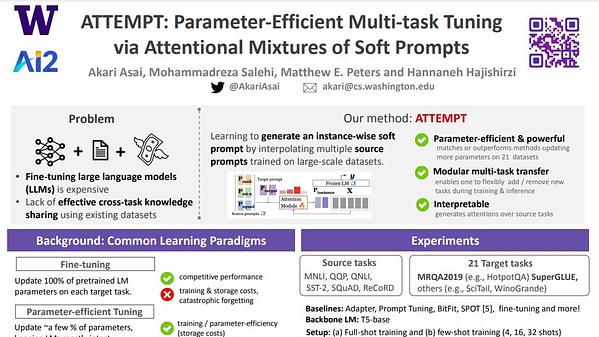

ATTEMPT: Parameter-Efficient Multi-task Tuning via Attentional Mixtures of Soft Prompts

Akari Asai and 3 other authors

Few-Shot Self-Rationalization with Natural Language Prompts

Ana Marasovic and 3 other authors

Efficient Hierarchical Domain Adaptation for Pretrained Language Models

Alexandra Chronopoulou and 2 other authors

Tailor: Generating and Perturbing Text with Semantic Controls

Alexis Ross and 4 other authors

Extracting Latent Steering Vectors from Pretrained Language Models

Nishant Subramani and 2 other authors

Lt. Gen. Matthew G. Glavy, Deputy Commandant for Information, USMC

Matthew E Peters

CDLM: Cross-Document Language Modeling

Avi Caciularu and 5 other authors

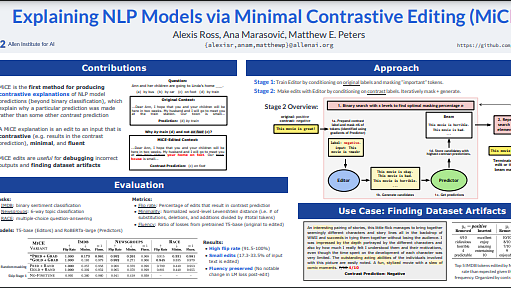

Explaining NLP Models via Minimal Contrastive Editing (MiCE)

Alexis Ross and 2 other authors

CDLM: Cross-Document Language Modeling

Avi Caciularu and 5 other authors

PIGLeT: Language Grounding Through Neuro-Symbolic Interaction in a 3D World

Yejin Choi and 5 other authors

Explaining NLP Models via Minimal Contrastive Editing (MiCE)

Alexis Ross and 2 other authors