Mehdi Rezagholizadeh

knowledge distillation

robustness

bert

nlu

data augmentation

bias

federated learning

domain adaptation

personalization

pretrained language models

ner

evaluation

adversarial

transformers

multilingual

22

presentations

37

number of views

Presentations

MIRACL: A Multilingual Retrieval Dataset Covering 18 Diverse Languages

Xinyu Zhang and 8 other authors

Evaluating Embedding APIs for Information Retrieval

Ehsan Kamalloo and 6 other authors

LABO: Towards Learning Optimal Label Regularization via Bi-level Optimization

Peng Lu and 4 other authors

Attribute Controlled Dialogue Prompting

Runcheng Liu and 4 other authors

Practical Takes on Federated Learning with Pretrained Language Models

Ankur Agarwal and 2 other authors

Practical Takes on Federated Learning with Pretrained Language Models

Ankur Agarwal and 2 other authors

Towards Fine-tuning Pre-trained Language Models with Integer Forward and Backward Propagation

Mohammadreza Tayaranian and 5 other authors

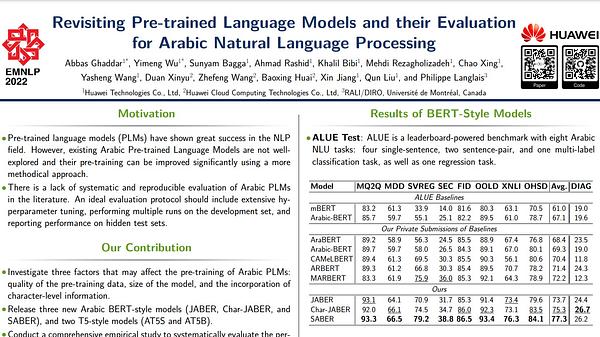

Revisiting Pre-trained Language Models and their Evaluation for Arabic Natural Language Processing

Yimeng Wu and 13 other authors

CILDA: Contrastive Data Augmentation using Intermediate Layer Knowledge Distillation

Khalil Bibi and 5 other authors

RAIL-KD: RAndom Intermediate Layer Mapping for Knowledge Distillation

Abbas Ghaddar and 5 other authors

KroneckerBERT: Significant Compression of Pre-trained Language Models Through Kronecker Decomposition and Knowledge Distillation

Marzieh Tahaei and 4 other authors

Towards Zero-Shot Knowledge Distillation for Natural Language Processing

Ahmad Rashid and 3 other authors

Towards Zero-Shot Knowledge Distillation for Natural Language Processing

Ahmad Rashid and 3 other authors

Universal-KD: Attention-based Output-Grounded Intermediate Layer Knowledge Distillation

Yimeng Wu and 4 other authors

How to Select One Among All ? An Empirical Study Towards the Robustness of Knowledge Distillation in Natural Language Understanding

Tianda Li and 5 other authors

Universal-KD: Attention-based Output-Grounded Intermediate Layer Knowledge Distillation

Yimeng Wu and 4 other authors